We mention L-band a lot here at SparkFun, from our RTK Facet L-band to our RTK mosaic-x5, which boasts triband reception. Recently on the blog, we discussed the basics of what it is, but didn't get to why we use it - we saved that for today! Buckle in for a history lesson.

L-band has been crucial in the development of GPS, helping to transform it from a military tool to the ubiquitous navigation aid we rely on today. Today we'll chart the journey of L-band, from its early adoption in GPS systems to its role in the high-tech positioning hardware we use now. We'll explore how this slice of the radio spectrum has been the backbone of GPS, supporting its growth and ensuring that getting lost is, well, a thing of the past. Ready to get a clearer signal on how the L-band has steered the course of GPS history? Let’s dive in!

L-Band and GPS

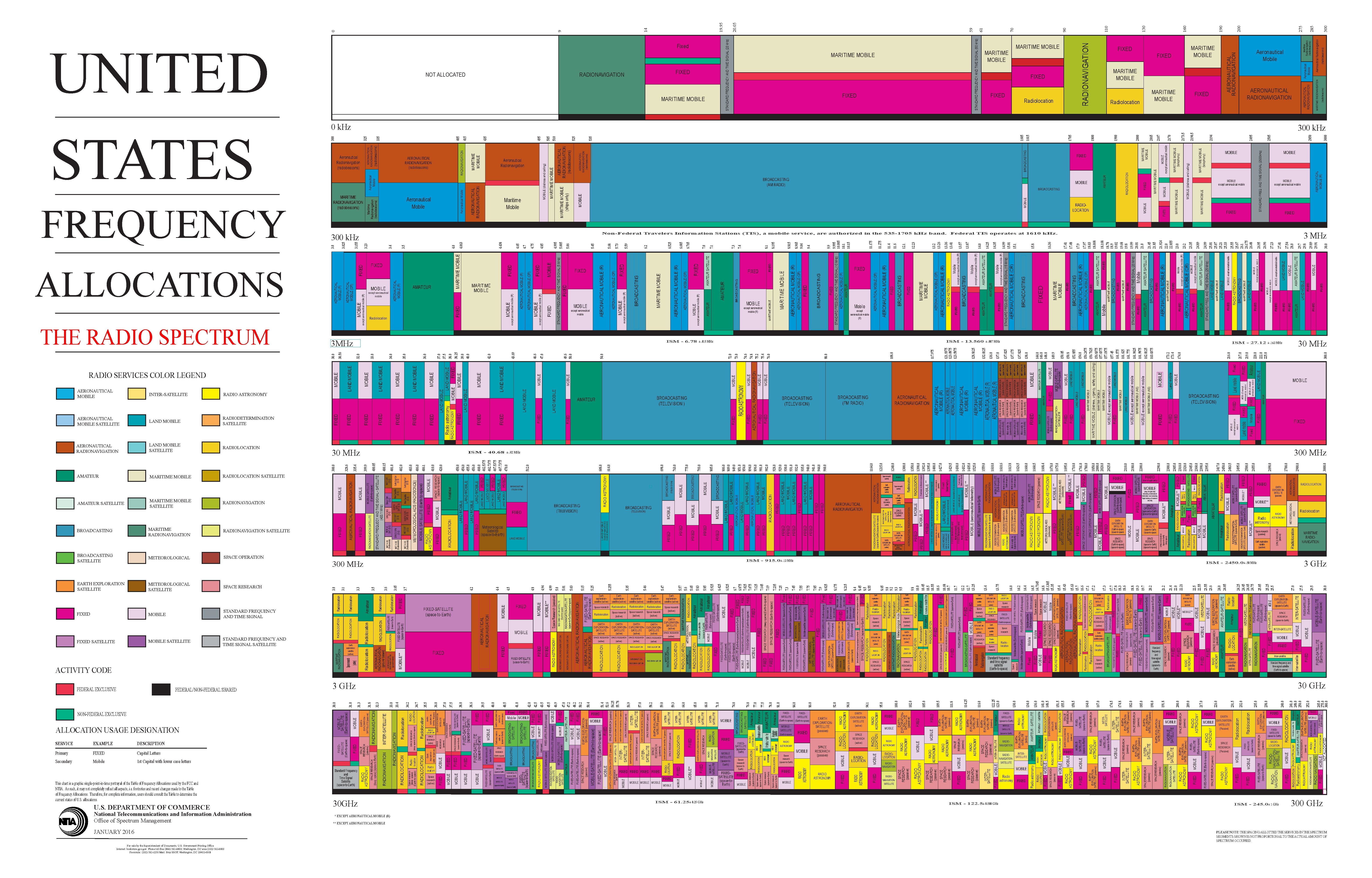

We use L-Band for GPS, the United States’ satellite-based navigation system. "L-Band" is the name for the continuous band of radio frequencies from 1 GHz to 2 GHz, so if your radio is transmitting at 1575.42 MHz, you are transmitting on L-Band. GPS transmits on frequencies ranging from 1176.45 MHz (L5) to 1575.42 MHz (L1), while other GNSS constellations, such as the European Union's Galileo and China's BeiDou, have their own frequencies within the L-Band. This raises a question: why do all of these GNSS systems, from so many different countries, use L-Band? What makes it such a desirable band for GNSS?
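To make the band boundaries concrete, here is a small Python sketch that checks whether a frequency falls in the 1-2 GHz L-band and converts the GPS carriers to wavelengths. One detail comes from the public GPS interface specification rather than from this post: each GPS carrier is an integer multiple of a 10.23 MHz reference clock (L2 at 1227.60 MHz is included for completeness).

```python
C = 299_792_458.0             # speed of light in vacuum, m/s
GPS_FUNDAMENTAL_HZ = 10.23e6  # GPS reference clock frequency

# Each GPS carrier is an integer multiple of the 10.23 MHz reference.
GPS_CARRIER_MULTIPLIERS = {"L1": 154, "L2": 120, "L5": 115}


def is_l_band(freq_hz):
    """True if the frequency lies in the 1-2 GHz L-band."""
    return 1e9 <= freq_hz <= 2e9


def wavelength_m(freq_hz):
    """Free-space wavelength in metres."""
    return C / freq_hz


for name, mult in GPS_CARRIER_MULTIPLIERS.items():
    f = mult * GPS_FUNDAMENTAL_HZ
    print(f"{name}: {f / 1e6:.2f} MHz, wavelength {wavelength_m(f) * 100:.1f} cm, "
          f"L-band: {is_l_band(f)}")
```

For comparison, Sputnik's 20.005 MHz signal has a wavelength of roughly 15 m and falls well outside the L-band.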

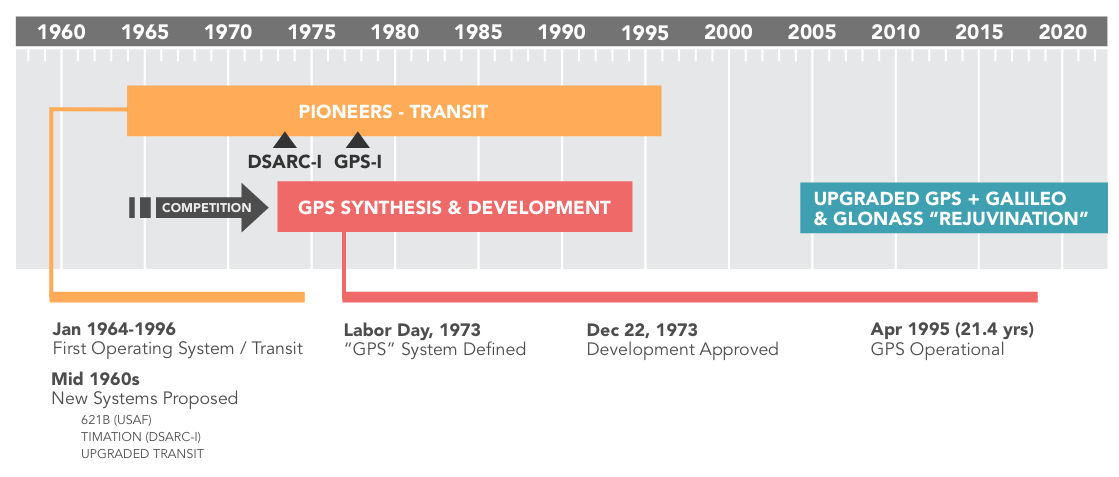

A Very Brief History of Satellite Navigation

In 1957, the USSR launched the world’s first artificial satellite, Sputnik 1. Sputnik carried a small radio transmitter called the D-200, designed to transmit temperature and pressure information measured by sensors in the body of the satellite. The D-200 ran on three silver-zinc batteries, which kept it transmitting for 21 days, or roughly 314 orbits around the Earth. This telemetry data was transmitted using a very simple and robust scheme: Threshold Encoding for the value, and On-Off Keying for the state. Scientists and engineers predetermined different thresholds of temperature and pressure that would be significant, then set up a bit pattern that corresponded to each threshold. The radio used On-Off Keying to signal whether each threshold was met or unmet. On-Off Keying is the most literal way to send digital data by radio: the presence of a carrier wave signifies a one, and its absence signifies a zero. The radio transmitted at 20.005 MHz as its primary channel and 40.002 MHz as a supplementary channel. These frequencies balanced technical feasibility and reliable data transmission with the desire to let both professional stations and radio amateurs worldwide track and monitor the satellite.
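The two ideas in this paragraph can be sketched in a few lines of Python. This only illustrates threshold encoding plus On-Off Keying as described above; it is not a reconstruction of the D-200's real telemetry format, and the thresholds, carrier frequency, and sample rate are hypothetical (scaled far below 20 MHz so the sample count stays small).

```python
import math


def threshold_bits(value, thresholds):
    """Threshold encoding: one bit per threshold, 1 if the value meets it."""
    return [1 if value >= t else 0 for t in thresholds]


def ook_modulate(bits, carrier_hz, sample_rate_hz, samples_per_bit):
    """On-Off Keying: emit the carrier for a 1 and silence for a 0."""
    samples = []
    n = 0
    for bit in bits:
        for _ in range(samples_per_bit):
            carrier = math.sin(2 * math.pi * carrier_hz * n / sample_rate_hz)
            samples.append(carrier if bit else 0.0)
            n += 1
    return samples


def ook_demodulate(samples, samples_per_bit, power_threshold=0.25):
    """Decide each bit from the average power in its time slot."""
    bits = []
    for start in range(0, len(samples), samples_per_bit):
        chunk = samples[start:start + samples_per_bit]
        power = sum(s * s for s in chunk) / len(chunk)
        bits.append(1 if power > power_threshold else 0)
    return bits


thresholds_c = [0.0, 25.0, 50.0]           # hypothetical temperature thresholds, deg C
bits = threshold_bits(30.0, thresholds_c)  # [1, 1, 0]
signal = ook_modulate(bits, carrier_hz=1_000, sample_rate_hz=8_000, samples_per_bit=80)
print(bits, ook_demodulate(signal, samples_per_bit=80))
```

The demodulator here is a simple power detector: because a keyed-off carrier carries no energy at all, even a crude receiver can tell ones from zeros.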

The launch of Sputnik signaled (pun intended) the beginning of the space race, and scientists and radio enthusiasts around the world began tracking its transmissions. William Guier and George Weiffenbach of Johns Hopkins University’s Applied Physics Laboratory (APL) were two such scientists. As part of his Ph.D. dissertation in microwave spectroscopy, Guier had access to a high-precision radio receiver. Using this receiver and the 20 MHz carrier broadcast by the WWV radio station in nearby Beltsville, Guier and Weiffenbach mixed the 20 MHz WWV signal with the 20.005 MHz Sputnik signal, leaving the 5 kHz difference between them, which is in the audible frequency range. They could now “listen” to the transmissions from Sputnik. As they listened, they realized that the tone was wandering as the craft passed overhead: while it was coming towards their location, the tone started out high, then became lower and lower, until they lost the signal.
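The listening trick can be written as two small functions: a first-order Doppler shift for the received frequency, and the difference ("beat") frequency left after mixing it with WWV's 20 MHz reference. The radial velocities below are hypothetical round numbers; the real line-of-sight velocity changed continuously during a pass.

```python
C = 299_792_458.0  # speed of light, m/s


def received_frequency(f_tx_hz, radial_velocity_m_s):
    """First-order Doppler shift.

    radial_velocity_m_s is the rate of change of the transmitter-receiver
    distance: negative while approaching, positive while receding.
    """
    return f_tx_hz * (1.0 - radial_velocity_m_s / C)


def beat_tone(f_rx_hz, f_ref_hz):
    """Mixing two nearby carriers leaves their difference frequency."""
    return abs(f_rx_hz - f_ref_hz)


SPUTNIK_HZ = 20.005e6
WWV_HZ = 20.0e6

for v in (-7000.0, 0.0, 7000.0):  # hypothetical radial velocities, m/s
    tone = beat_tone(received_frequency(SPUTNIK_HZ, v), WWV_HZ)
    print(f"radial velocity {v:+8.0f} m/s -> tone {tone:7.1f} Hz")
```

With ±7 km/s of radial velocity the 5 kHz tone swings by roughly ±470 Hz: high while the satellite approaches and low as it recedes, which is the falling pitch described above.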

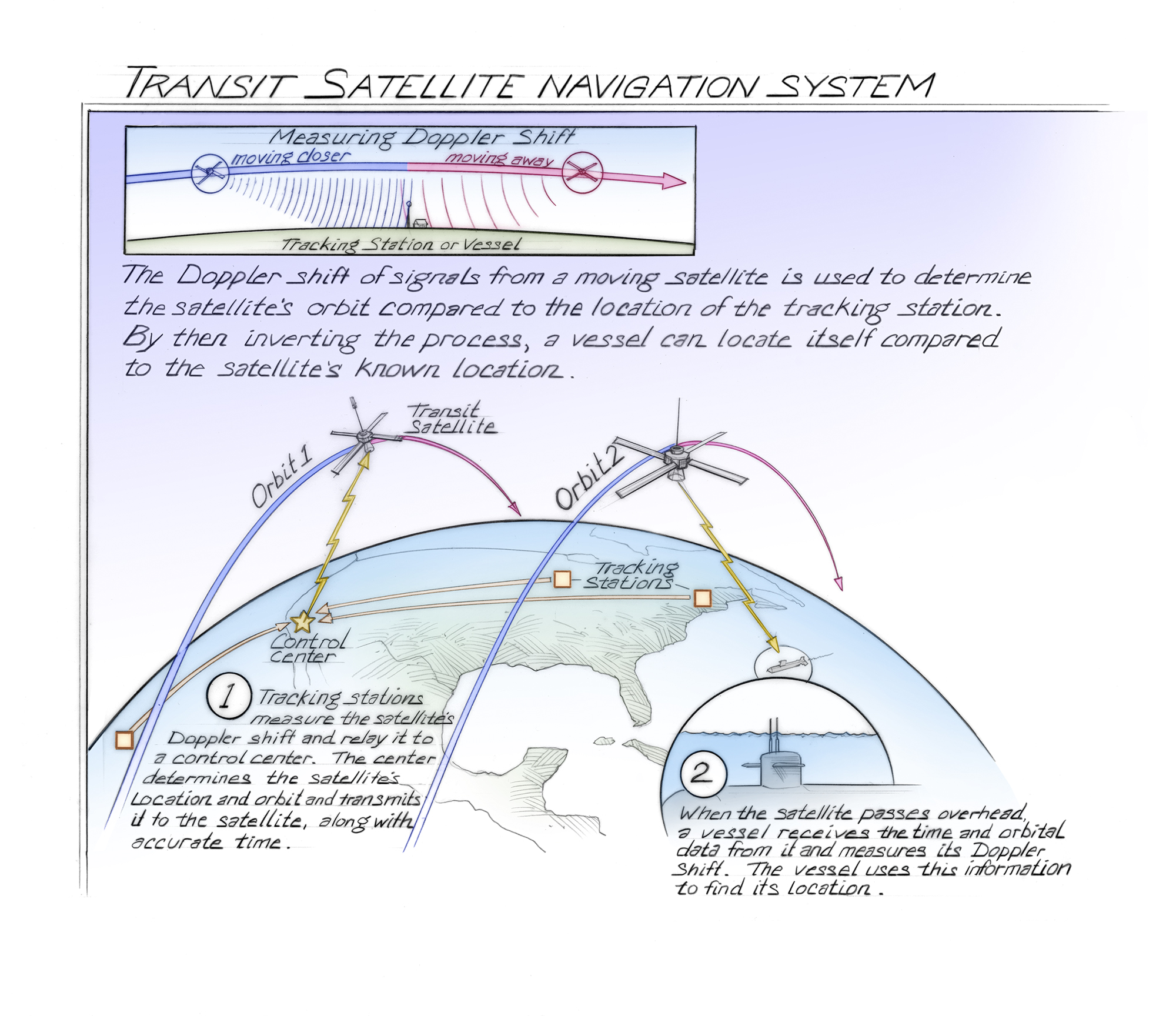

They quickly deduced that they were hearing the Doppler shift of the signal, a change in received frequency caused by the relative motion between transmitter and receiver. By playing the audible signal through a wave analyzer, they created a frequency/time table, and with this data they were able to calculate the orbital period of the craft with high accuracy. With some refinement, the same calculation determined all of the satellite's orbital parameters. This research caught the notice of Dr. Frank McClure, the Director of APL’s Research Center. He realized that the method could be inverted: instead of determining the location of the satellite from the receiver’s known position, the inverted calculation could determine the receiver’s position from the satellite's known location. Dr. McClure began working on this project, and by the spring of 1958 had sent out a proposal for a project to create a satellite-based navigation system for the US Navy; this project would eventually become the Navy Navigation Satellite System (NNSS).
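The inversion McClure described can be illustrated with a simplified model, assuming a satellite flying a straight line at a known, constant speed past the receiver (real Transit processing used curved orbits, broadcast orbit data, and Earth rotation, none of which are modelled here). The Doppler shift crosses zero at the moment of closest approach, and for a straight pass the slope of the curve at that moment is -f0·v²/(c·d), so the receiver can solve for its distance d from the satellite track. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def doppler_curve(f0_hz, speed_m_s, closest_m, times_s):
    """Doppler shift (Hz) for a transmitter flying a straight line past the receiver.

    At time t the transmitter is speed*t along its track; closest_m is the
    perpendicular distance from the receiver to the track (reached at t = 0).
    """
    shifts = []
    for t in times_s:
        along = speed_m_s * t
        rng = math.hypot(closest_m, along)
        radial_velocity = speed_m_s * along / rng  # d(range)/dt
        shifts.append(-f0_hz * radial_velocity / C)
    return shifts


def closest_approach(f0_hz, speed_m_s, times_s, shifts_hz):
    """Estimate time and distance of closest approach from a Doppler curve."""
    # Closest approach is where the shift changes from positive (approaching)
    # to negative (receding).
    for i in range(len(shifts_hz) - 1):
        if shifts_hz[i] >= 0 > shifts_hz[i + 1]:
            break
    else:
        raise ValueError("no zero crossing in Doppler curve")
    t0, t1 = times_s[i], times_s[i + 1]
    s0, s1 = shifts_hz[i], shifts_hz[i + 1]
    slope = (s1 - s0) / (t1 - t0)  # Hz per second near the crossing
    t_ca = t0 - s0 / slope         # linear interpolation of the zero crossing
    # Straight pass: slope at closest approach = -f0 * v^2 / (c * d)
    distance = -f0_hz * speed_m_s ** 2 / (C * slope)
    return t_ca, distance


times = list(range(-300, 301))  # one sample per second
shifts = doppler_curve(20.005e6, 7800.0, 1.0e6, times)
print(closest_approach(20.005e6, 7800.0, times, shifts))
```

A steep, fast-falling tone means the satellite passed close by; a gentle slope means a distant pass. Combined with a known satellite orbit, the time and distance of closest approach constrain where the receiver can be.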

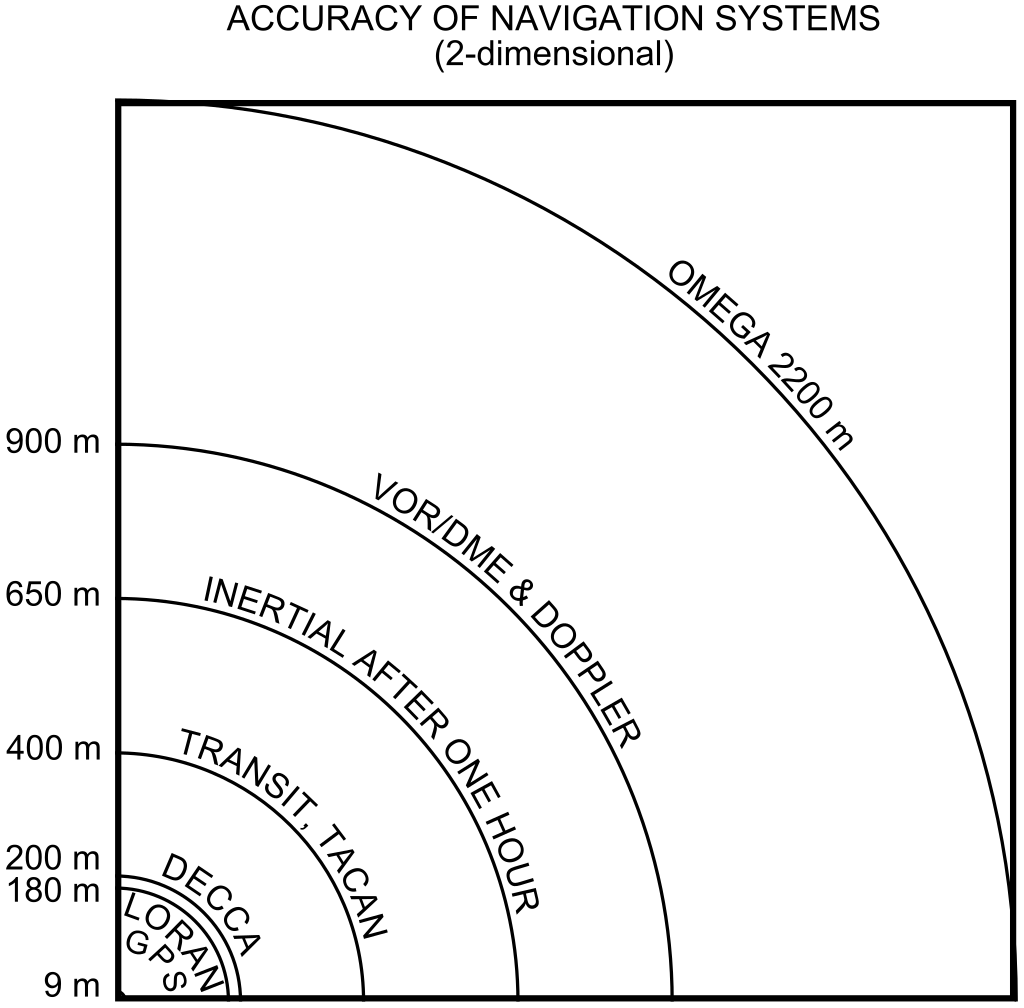

The NNSS used Transit satellites and was the first satellite-based navigation system; design began in 1958 and it entered service in 1964. The Transit satellites transmitted at both 150 MHz and 400 MHz, well below the L-Band, and receivers used the Doppler effect to work out their own position. The US Navy's primary use of the NNSS was to support its Polaris ballistic missile submarine fleet. However, the accuracy of the NNSS in its original form was low, and it only provided a two-dimensional latitude/longitude position. There were a total of five Transit satellites, so getting the highest-accuracy position fix possible (200 meters) could take over an hour. To improve accuracy, a new component was needed: time. If the satellites also broadcast a precise timing signal, receivers could measure how long the signal took to arrive and add that timing difference to the position calculation. Two additional satellites, called Timation satellites, were launched in an effort to solve this problem.
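Timing matters so much because radio signals travel at the speed of light: a receiver turns signal travel time into distance by multiplying by c, so any error in its clock becomes a range error of c·Δt. The sketch below assumes a hypothetical 1,000 km satellite range.

```python
C = 299_792_458.0  # speed of light, m/s


def pseudorange_m(true_range_m, receiver_clock_error_s):
    """Range a receiver would compute from signal travel time if its clock is off."""
    return true_range_m + C * receiver_clock_error_s


TRUE_RANGE_M = 1_000_000.0  # hypothetical satellite-to-receiver distance
for clock_error in (1e-9, 1e-6, 1e-3):
    error_m = pseudorange_m(TRUE_RANGE_M, clock_error) - TRUE_RANGE_M
    print(f"clock error {clock_error:g} s -> range error {error_m:,.1f} m")
```

Every nanosecond of clock error is about 30 cm of range error, and every microsecond is about 300 m.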

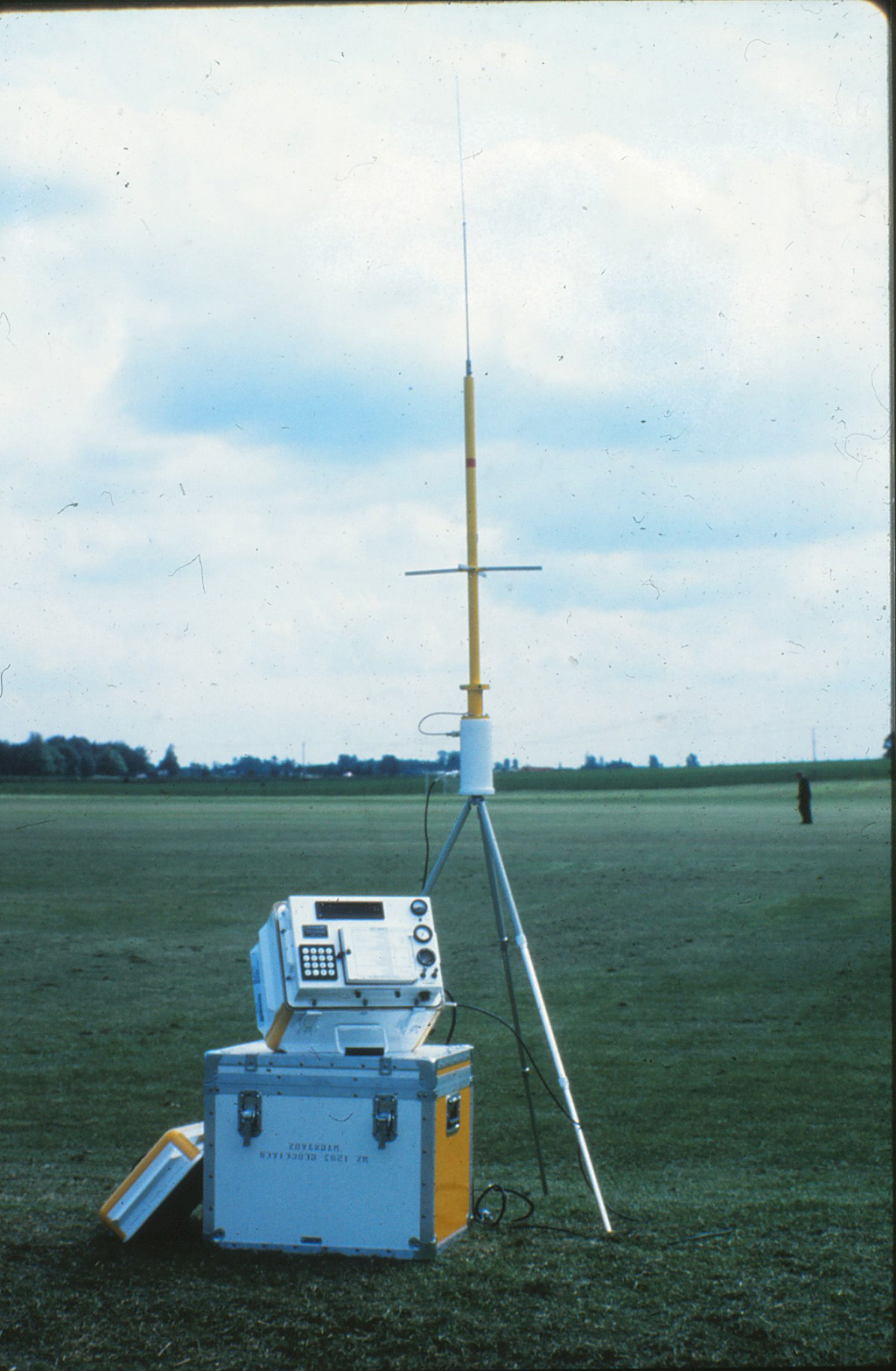

The Timation satellites proved successful, increasing the theoretical accuracy of the NNSS to 1 meter (though reaching it took multiple days of data collection and expensive receiver equipment). In 1967, US Vice President Hubert Humphrey declared the system operational for civilian use, after which it became heavily integrated into the ocean shipping industry, surveying, and civil engineering; once released to the civilian world, it was commonly called “Transit” or “NavSat”. Transit had a huge impact on surveying, revolutionizing the tools surveyors used: they now carried Doppler survey receivers, called georeceivers, which were used to establish the WGS 84 reference coordinate system that we still use to this day. In 1974, the Soviet Union began launching its own navigation satellites for a system called Parus. Parus uses the same principle (and similar frequencies) as the NNSS, and is still in service as of 2024.

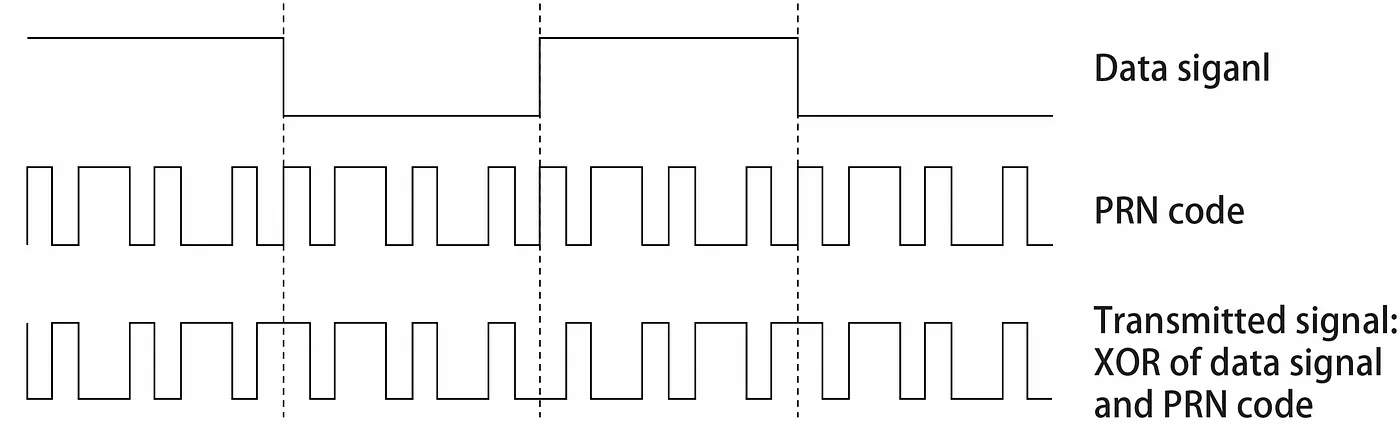

The NNSS was revolutionary for naval navigation, but it provided low accuracy, involved long waits between position fixes, and required expensive receiver equipment. To solve these issues, new systems were designed. An early (and important) effort codenamed 621-B, run in parallel with the Timation project, was designed by the US Air Force during the mid-1960s. 621-B explored many advanced digital signal transmission and processing techniques, including the use of pseudorandom noise to increase resistance to jamming and to crosstalk between navigation satellites. The idea was that each satellite would be assigned its own pseudorandom noise (PRN) code, a bit sequence that looks like noise but is fully repeatable. Each satellite mixes its code into its signal, and a receiver that knows the code can pick that satellite out while the other satellites' codes look like background noise. This scheme essentially allows the frequency band to be multiplexed, with many transmitters sharing the same frequency at the same time.
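The PRN idea from 621-B survives in GPS as the C/A (coarse/acquisition) code: each satellite broadcasts its own 1023-chip sequence built from two 10-stage shift registers, G1 and G2. The sketch below follows the generator described in the public GPS interface specification (IS-GPS-200) but includes only the G2 tap pairs for PRNs 1 to 4, so treat it as an illustration rather than a receiver-ready implementation. Correlation shows why many satellites can share one frequency: a code matched against itself at the right alignment scores 1023, while any other alignment, or any other satellite's code, scores far lower.

```python
# G2 output taps (1-indexed stages) for the first few satellites only;
# the full table in the interface specification covers many more PRNs.
G2_TAPS = {1: (2, 6), 2: (3, 7), 3: (4, 8), 4: (5, 9)}


def ca_code(prn):
    """Generate the 1023-chip GPS C/A code for a satellite as 0/1 chips."""
    s1, s2 = G2_TAPS[prn]
    g1 = [1] * 10
    g2 = [1] * 10
    chips = []
    for _ in range(1023):
        chips.append(g1[9] ^ g2[s1 - 1] ^ g2[s2 - 1])
        # G1 feedback: 1 + x^3 + x^10
        f1 = g1[2] ^ g1[9]
        # G2 feedback: 1 + x^2 + x^3 + x^6 + x^8 + x^9 + x^10
        f2 = g2[1] ^ g2[2] ^ g2[5] ^ g2[7] ^ g2[8] ^ g2[9]
        g1 = [f1] + g1[:9]
        g2 = [f2] + g2[:9]
    return chips


def correlate(a, b, shift):
    """Circular correlation of two 0/1 codes after mapping 0 -> +1, 1 -> -1."""
    n = len(a)
    return sum((1 - 2 * a[i]) * (1 - 2 * b[(i + shift) % n]) for i in range(n))


prn1, prn2 = ca_code(1), ca_code(2)
print(correlate(prn1, prn1, 0))  # 1023: the code lines up perfectly with itself
print(max(abs(correlate(prn1, prn2, k)) for k in range(1023)))  # at most 65
```

A receiver exploits exactly this: it slides a local copy of each satellite's code against the incoming signal and looks for the sharp correlation peak.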

The Global Positioning System, or GPS, was a US Department of Defense project started in the early 1970s to design a better satellite-based navigation system. The GPS design built on lessons learned from previous systems: the inverted orbital position calculations of Transit, the time corrections of Timation, and the digital PRN signals of project 621-B. One of the key goals was a four-dimensional fix, letting receivers determine latitude, longitude, altitude, and time.
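A four-dimensional fix means solving for four unknowns (x, y, z, and the receiver clock bias), so at least four satellites are needed. Below is a minimal Gauss-Newton least-squares sketch in Python, assuming idealized pseudoranges: no ionospheric or tropospheric delay, no satellite clock error, and no Earth rotation. The satellite positions and receiver location are made up, in an Earth-centred frame, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def solve_linear(a, b):
    """Solve the small dense system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def solve_fix(sat_positions, pseudoranges, iterations=10):
    """Estimate receiver (x, y, z) in metres and clock bias in seconds.

    Each pseudorange is modelled as |sat - receiver| + c * bias; the model is
    linearised and refined by Gauss-Newton, starting at the Earth's centre.
    """
    x, y, z, b = 0.0, 0.0, 0.0, 0.0  # b is the clock bias expressed in metres
    for _ in range(iterations):
        rows, residuals = [], []
        for (sx, sy, sz), rho in zip(sat_positions, pseudoranges):
            r = math.dist((x, y, z), (sx, sy, sz))
            rows.append([(x - sx) / r, (y - sy) / r, (z - sz) / r, 1.0])
            residuals.append(rho - (r + b))
        # Normal equations: (H^T H) delta = H^T residuals
        hth = [[sum(row[i] * row[j] for row in rows) for j in range(4)] for i in range(4)]
        htr = [sum(row[i] * res for row, res in zip(rows, residuals)) for i in range(4)]
        dx, dy, dz, db = solve_linear(hth, htr)
        x, y, z, b = x + dx, y + dy, z + dz, b + db
    return (x, y, z), b / C


# Hypothetical scenario: six satellites on a 26,560 km radius sphere.
R = 26_560_000.0
dirs = [(1, 0, 0), (0.6, 0.8, 0), (0.6, -0.8, 0), (0.6, 0, 0.8), (0.6, 0, -0.8), (0.8, 0.36, 0.48)]
sats = [(R * ux, R * uy, R * uz) for ux, uy, uz in dirs]
truth = (6_000_000.0, 1_500_000.0, 1_500_000.0)
true_bias_s = 1e-4
rhos = [math.dist(s, truth) + C * true_bias_s for s in sats]
print(solve_fix(sats, rhos))
```

Starting from the centre of the Earth is a common initial guess; with noise-free data like this the estimate settles within a handful of iterations.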

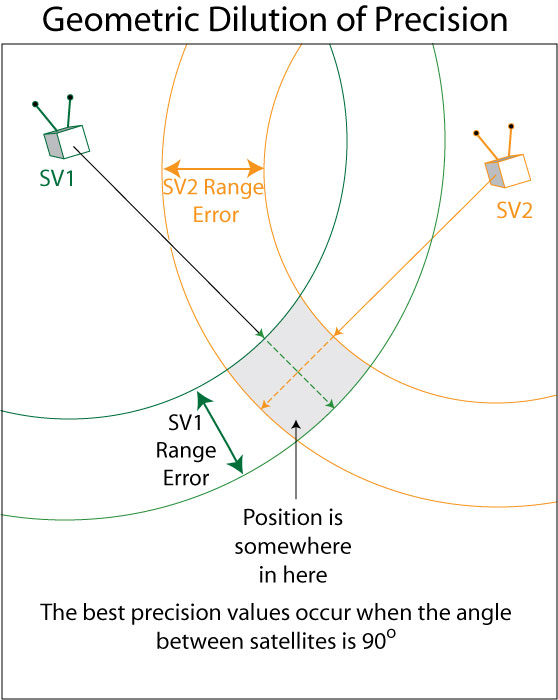

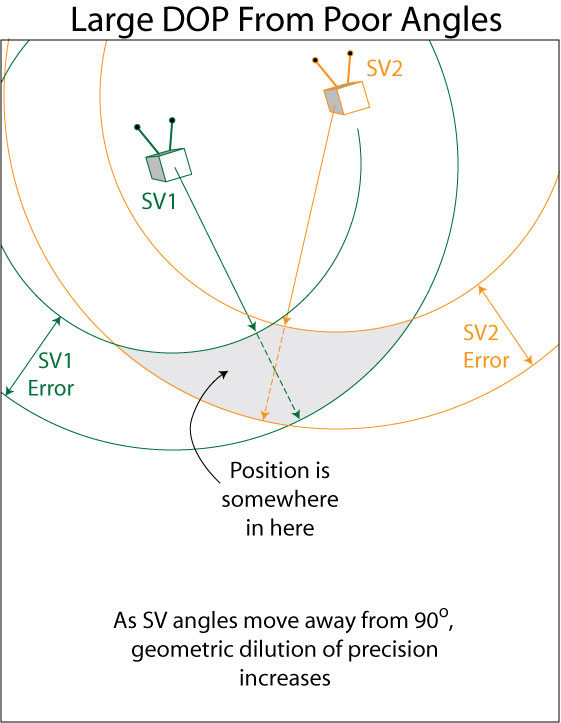

The first of eleven Block I GPS satellites was launched in 1978. The system was designed to have 24 operational satellites in total, arranged into six orbital planes to maximize availability and precision. Having multiple orbital planes gives better geometry between the receiver and the satellites, and better geometry lowers the Dilution of Precision (DOP) of the position calculation. The satellites were launched in “Blocks”; the first three Blocks were manufactured by Rockwell International, a total of 39 satellites launched over 19 years. GPS became the first satellite-based navigation system to use the L-Band for transmission, and many GNSS constellations launched since then also use the L-Band. This brings us full circle back to the question: why L-Band?
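Geometric DOP can be computed from the directions to the satellites alone: build a matrix H whose rows are the unit line-of-sight vectors plus a clock column, then take the square root of the trace of (H^T H)^-1. The sketch below compares two hypothetical four-satellite layouts in a local east/north/up frame; it is a simplified illustration, not how any particular receiver computes DOP.

```python
import math


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def gdop(az_el_deg):
    """Geometric DOP from satellite (azimuth, elevation) pairs in degrees."""
    rows = []
    for az, el in az_el_deg:
        a, e = math.radians(az), math.radians(el)
        # Unit line-of-sight vector (east, north, up) plus a clock column
        rows.append([math.cos(e) * math.sin(a), math.cos(e) * math.cos(a), math.sin(e), 1.0])
    hth = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    q = invert(hth)
    return math.sqrt(sum(q[i][i] for i in range(4)))


spread = [(0, 90), (0, 10), (120, 10), (240, 10)]      # one overhead, three low and far apart
clustered = [(0, 80), (90, 85), (180, 80), (270, 85)]  # all bunched near the zenith
print(f"spread GDOP:    {gdop(spread):.2f}")
print(f"clustered GDOP: {gdop(clustered):.2f}")
```

The spread layout comes out at about 2, while the clustered layout is many times worse, which is why spreading satellites across several orbital planes helps.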

Why L-Band?

The selection of frequencies for GPS was a complex problem: the cost of satellites and receivers had to be low, the equipment small, and the signals detectable at very long range through a variety of environments. The Earth's ionosphere presented challenges, as did rain and snow. The chief architects and developers of the system were Bradford Parkinson, Roger Easton, and Ivan Getting, but the engineering that decided which frequencies to use was done by Frank Butterfield of the Aerospace Corporation.

Butterfield wrote a paper in 1976 titled "Operating Frequencies for the NAVSTAR/Global Positioning System" which laid out what frequency would work best for the GPS system, and why. A quote directly from that paper's Summary section explains it very clearly:

The NAVSTAR/GPS navigation signal frequency choices are constrained on the low side by performance limitations and by frequency allocation actions of earlier years. They are limited on the upper side by the cost of satellites in orbit.

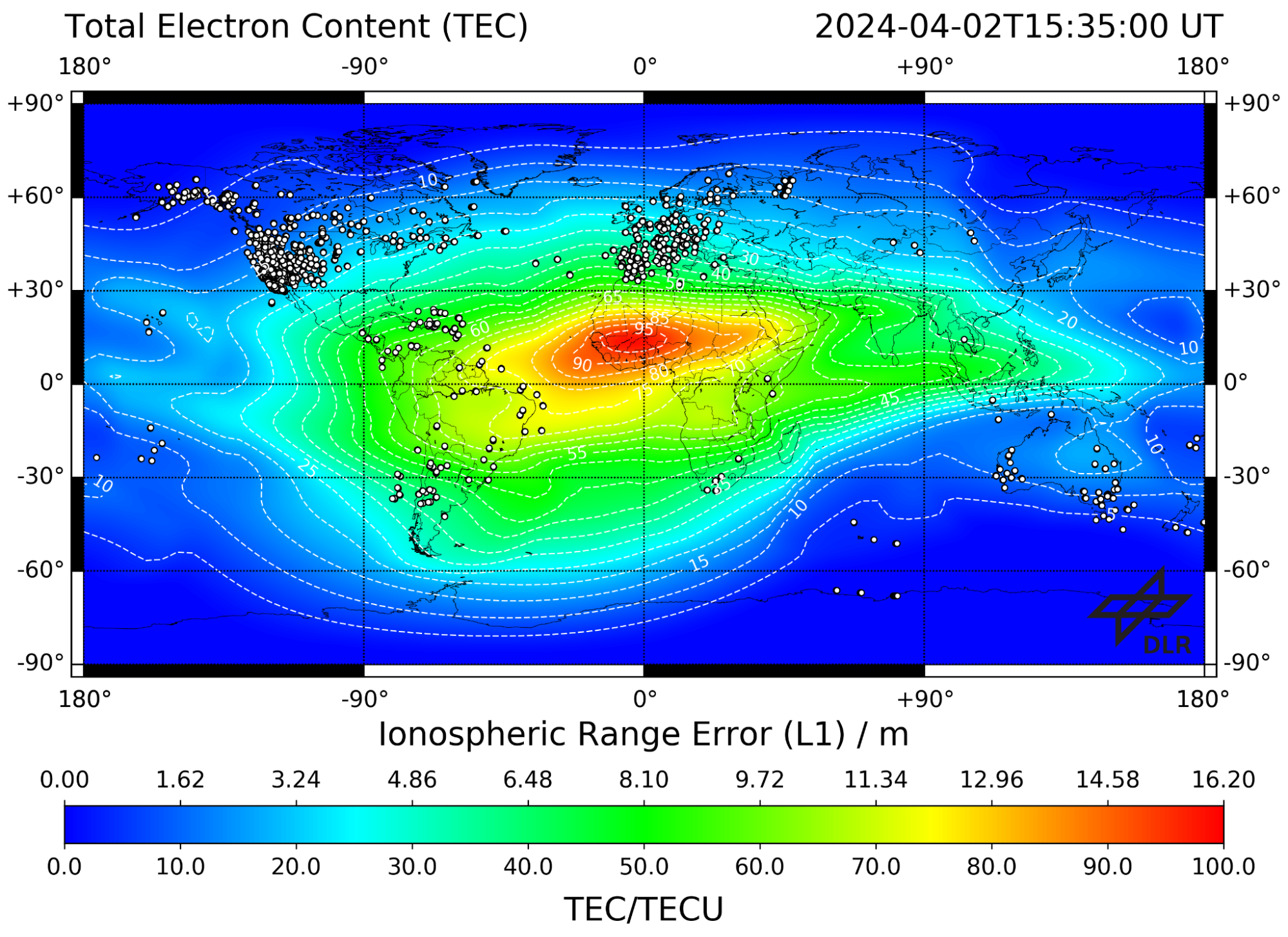

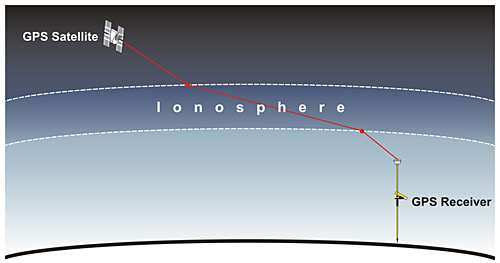

Most radio band allocations at that time sat below the 1000 to 2000 MHz L-Band, such as FM radio (88-108 MHz), amateur (ham) radio (1.6-1240 MHz), and broadcast television (44-294 MHz). Because GPS would sit above these widely used frequencies, there would be much less interference to sift through to find the weak GPS signals, allowing a more precise and accurate fix. Lower frequencies also suffer more pronounced ionospheric propagation effects: as they encounter the ionosphere, they experience reflection, refraction, diffraction, and scintillation. Some of the electromagnetic energy is reflected at the boundary layer between vacuum and ionospheric plasma, bouncing back into space.

This boundary layer also causes refraction and diffraction, altering the speed and direction of propagation, similar to a laser “bending” as it shines through an aquarium. Scintillation is an effect caused by density variations in the plasma; these density variations cause different amounts of refraction in the wave, causing parts of the wave to overlap and interfere constructively or destructively. This interference causes the electromagnetic power of the carrier wave to fluctuate.
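The constructive and destructive interference behind scintillation can be shown with a toy model: a direct wave plus one scattered copy arriving with a different phase. Real scintillation involves many scattered paths whose phases change as the plasma drifts; the single scattered path and its 0.3 relative amplitude are assumptions chosen for illustration.

```python
import cmath
import math


def combined_power(direct_amplitude, scattered_amplitude, phase_rad):
    """Power of a direct wave plus a scattered copy arriving with a phase offset."""
    total = direct_amplitude + scattered_amplitude * cmath.exp(1j * phase_rad)
    return abs(total) ** 2


for deg in (0, 90, 180):
    p = combined_power(1.0, 0.3, math.radians(deg))
    print(f"phase offset {deg:3d} deg -> relative power {p:.2f}")
```

As the phase offset drifts, received power swings between (1 + a)² and (1 - a)² of the direct-path power (1.69 and 0.49 here), which a receiver sees as fading.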

- The Ionospheric Effect

- The ionosphere has an inconsistent electron density, and the electron density determines many properties of how the radio wave behaves as it passes through.

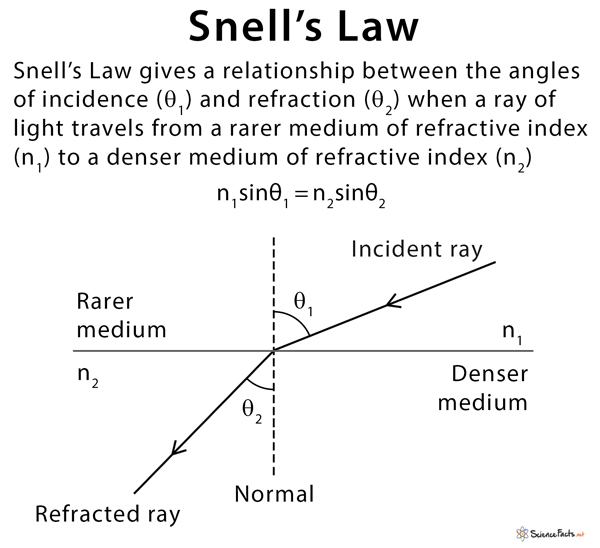

The interaction with the ionosphere causes signal group delay, a signal processing term for the propagation delay introduced by refraction. As the wave encounters a change in the refractive index of the medium it propagates through (such as moving from a vacuum into ionospheric plasma), the transmission velocity changes. This change of velocity changes the angle of propagation as well; this is a classic example of Snell’s law and Conservation of Momentum.
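A standard first-order model puts numbers on ionospheric group delay: the extra range is approximately 40.3·TEC/f² metres, where TEC is the total electron content along the path (electrons per square metre) and f is in hertz. The sketch below compares the frequencies mentioned in this post at a hypothetical 20 TEC units; the formula is an approximation that becomes unreliable far below L-band, so read the 20 MHz figure as a trend rather than a prediction.

```python
K_IONO = 40.3  # first-order ionospheric constant: delay_m = 40.3 * TEC / f^2
TECU = 1e16    # one TEC unit, electrons per square metre


def iono_group_delay_m(freq_hz, tec_el_per_m2):
    """First-order ionospheric group delay, expressed as extra range in metres."""
    return K_IONO * tec_el_per_m2 / freq_hz ** 2


frequencies = {
    "Sputnik 20.005 MHz": 20.005e6,
    "Transit 150 MHz": 150e6,
    "Transit 400 MHz": 400e6,
    "GPS L5 1176.45 MHz": 1176.45e6,
    "GPS L1 1575.42 MHz": 1575.42e6,
}
tec = 20 * TECU  # hypothetical electron content along the path
for name, f in frequencies.items():
    print(f"{name:>20}: {iono_group_delay_m(f, tec):12.2f} m")
```

Because the delay scales with 1/f², moving from 400 MHz to L1 cuts it by a factor of about 15.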

An interesting attribute of radio waves (and electromagnetic waves in general) is that as they pass from one medium to another, the wavelength changes (because of the change in velocity) while the frequency remains the same. It follows that how strongly a wave is bent depends on its wavelength. In the ionosphere's plasma the refractive index is slightly below 1, and it gets closer to 1 as the wavelength gets shorter, so shorter wavelengths (higher frequencies) are bent less. The lower the frequency chosen for GPS signals, the greater the bending would be, and the worse the accuracy of the system.
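The wavelength dependence can be sketched with the textbook refractive index of a cold, unmagnetized plasma, n = sqrt(1 - (f_p/f)²), where the plasma frequency f_p ≈ 8.98·sqrt(N_e) Hz depends on the electron density N_e. The sketch treats the ionosphere as a single sharp boundary with a hypothetical density of 10¹² electrons/m³ (real layers are gradual and patchy) and applies Snell's law at that boundary.

```python
import math


def plasma_frequency_hz(electron_density_per_m3):
    """Electron plasma frequency, approximately 8.98 * sqrt(N_e) Hz."""
    return 8.98 * math.sqrt(electron_density_per_m3)


def refractive_index(freq_hz, electron_density_per_m3):
    """Phase refractive index of a cold, collisionless, unmagnetized plasma."""
    ratio = plasma_frequency_hz(electron_density_per_m3) / freq_hz
    if ratio >= 1:
        raise ValueError("wave cannot propagate: frequency below plasma frequency")
    return math.sqrt(1 - ratio ** 2)


def bending_deg(freq_hz, electron_density_per_m3, incidence_deg):
    """Change of direction (deg) entering the plasma from vacuum, via Snell's law."""
    n = refractive_index(freq_hz, electron_density_per_m3)
    sin_out = math.sin(math.radians(incidence_deg)) / n  # n_vacuum = 1
    if sin_out >= 1:
        raise ValueError("total reflection: wave does not enter the layer")
    return math.degrees(math.asin(sin_out)) - incidence_deg


N_E = 1e12  # hypothetical electron density, electrons per cubic metre
for f in (20.005e6, 150e6, 400e6, 1575.42e6):
    print(f"{f / 1e6:8.2f} MHz: n = {refractive_index(f, N_E):.6f}, "
          f"bent by {bending_deg(f, N_E, 30.0):.6f} deg")
```

At a 30° angle of incidence, a 20 MHz wave is bent by about 4°, while L1 is bent by well under a thousandth of a degree.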

If lower frequencies introduce greater error, why not choose a very high frequency? Well, wavelength also determines how well a signal can pass through or around obstacles. Very high frequencies would not penetrate well through miles of rain and snow, tree canopies, or the roof of a car.

The system needed to be robust, able to function in all sorts of environments and locations. A secondary reason was cost of equipment. Receivers needed to be cheap enough for large scale production, and this project was already demanding a large budget given the planned 24 GPS satellites. Reliable high frequency components were very expensive, and were even more expensive to launch into space.
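One way to see why higher frequencies cost more in orbit (an illustration chosen here, not an argument quoted from Butterfield's paper): when both antennas have fixed gain, as with a satellite antenna that covers the whole Earth and a receiver antenna that has to see the whole sky, received power falls as 1/f², which the free-space path loss formula captures. The distance below is roughly a GPS orbit altitude.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def free_space_path_loss_db(distance_m, freq_hz):
    """Free-space path loss between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


d = 20_200_000.0  # roughly a GPS orbit altitude, m
for f in (400e6, 1575.42e6, 10e9):
    print(f"{f / 1e6:8.2f} MHz: {free_space_path_loss_db(d, f):6.1f} dB")
```

Each doubling of frequency adds about 6 dB of loss, and that deficit has to be made up somewhere, typically with more satellite transmit power.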

So there you have it: that's why L-band is used for satellite navigation. The frequency had to be high enough to keep ionospheric effects and interference from our environment manageable, low enough to pass through rain, snow, and tree canopies, and still keep the cost of equipment in check.

The process of allocating L-band frequencies for GPS signals was like a high-stakes game of Tetris, but instead of fitting blocks together, it involved fitting a myriad of global interests and technical constraints into a coherent spectrum policy. The L-band is a sweet spot for satellite communication due to its balance between range and precision. However, it's also prime real estate for other services, leading to a complex and often contentious allocation process. The process involved intricate negotiations and regulatory wrangling, often mediated by international bodies like the International Telecommunication Union (ITU). These organizations had to balance national security concerns, given GPS's origins as a military technology, with the burgeoning potential for civilian applications that could revolutionize navigation, agriculture, and more.

The allocation was not just about finding an empty slot in the spectrum; it was about ensuring that the frequencies used for GPS would not interfere with existing services and would be protected from future encroachments. This led to the establishment of specific frequency bands for GPS use, with careful consideration of technical parameters like signal strength and interference thresholds. As GPS technology evolved and became integrated into virtually every aspect of modern life, the importance of these allocated frequencies only grew. The process also set a precedent for how global spectrum allocation could be managed in the face of competing interests and technological advancements. In essence, the allocation of L-band frequencies for GPS was a masterclass in international cooperation, technical analysis, and forward-thinking policy-making.

Sources and Further Reading:

- THE EARLY DAYS OF SPUTNIK

- NSA Signals from Outer Space - listening to Sputnik

- The evolution of GPS satellites and their use today and GPS Signals, both from Penn State's GEOG 862: GPS and GNSS for Geospatial Professionals

- A look at the TRANSIT satellite system

- Sources of GNSS error

- GPS Signal Processing

- Atmospheric Electron Content and its effects

- The Ionospheric Effect

I also found a pdf of the original engineering design document for the radio system aboard Sputnik, but it is in Russian and the only translation system I’ve been able to get working with it is text only…

This source will likely not work for you; I ended up finding a VPN server in Russia to get access

You probably use GPS all the time, what else do you want to know about how it works? Let us know in the comments!
