Like most engineers, I was first introduced to FPGAs (Field-Programmable Gate Arrays) in college. By that time I was already experienced with programming, logic and circuit design, but even with those subjects under my belt, I found FPGAs wildly confusing. For a long time after taking that FPGA course, I was convinced that I could have developed a better course than my professor, and blamed most of my initial confusion on that. I felt that way right up until I tried to do exactly what my professor did: teach FPGAs.

On the surface, it seems like someone with lots of microcontroller experience would have no problem migrating to the world of programmable logic, but that is simply not the case. Even the most experienced programmer and developer will have problems understanding the concept behind things like "non-procedural programming." Just the concept of a circuit being "written" in a language like VHDL rather than built is enough to confuse the average DIYer.

Before reading on!

This post was written in 2013, and developing with FPGAs has come a long way since then. For those looking to get introduced to programmable logic with FPGAs, we recommend trying out the Alchitry Au. To make getting started easier, the Alchitry Au board has full Lucid support, a built-in library of useful components to use in your project, a Getting Started Guide, and a debugger! See the bottom of this post for SparkFun's FPGA product offerings.

As an engineer at SparkFun, it is my job to provide the "shortcuts" that a hobbyist or student might need to accomplish their goals in the electronic realm. So a while ago, I set about trying to write a tutorial that would provide the base information and tools needed to get someone started using FPGAs. After ten pages of writing, I realized that teaching the concepts needed to understand and use an FPGA is a much bigger problem than I anticipated. In fact, it's such a large and complex problem that, after a couple of years, I still haven't found a complete way to introduce the uninitiated to the world of FPGAs.

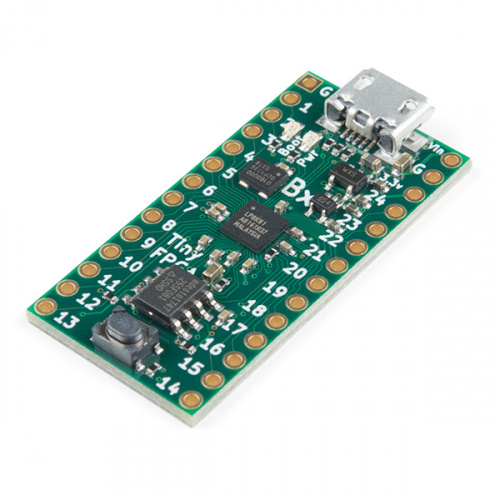

Luckily, the DIY world has lots of very talented people working on the very same problem. In particular, Jack Gassett, the creator of the Papilio, and Justin Rajewski, creator of the Mojo, have done some great work creating boards and material to ease the transition into the world of FPGAs. These companies have a great deal of supporting information and tutorials that make them a perfect choice if you have a bit of electronics experience and want to start working with FPGAs. The Papilio has tutorials at the Papilio site, and the Mojo has tutorials at the Embedded Micro site.

This post is meant to shine a light on a massive gap in the open source community. To this day (2013 at the time of this post), FPGAs are still very, very difficult to learn and teach. There are people who want to learn logic and FPGAs but are turned off by the subject because the barrier to entry is still so high. It's the open source community's responsibility to lower that barrier to entry, but so far, it's still high enough to scare away even seasoned electronics enthusiasts.

So what's to be done? Personally, I don't really have a good answer. People like Jack Gassett and Justin Rajewski have bravely taken up the cause of bringing FPGAs to the masses, but there is still much to be done. It may be a while (or never) before FPGAs are as friendly as, say, the Arduino, but with enough talented geeks attacking the problem, we can at least make programmable logic less scary.

So what do you think the next step is? What would make FPGAs easier to learn and use? If you're already a developer, what made your learning experience easier?

Looking to get hands-on with FPGA?

We've got you covered!

Having worked in the FPGA industry for 2 years, I still learn something new every day.

To really learn FPGAs well, you really gotta start with the very basics: logic blocks and designs. Then translate those basic blocks into HDL (Hardware Description Language) code.

From here, it's not really about learning the FPGA itself, but more about the software that you need to use to program the chip. As people in the IRC channel know, I am more than willing to demo HDL and the FPGA design process on my Justin.tv account. People who watch do learn things, and I try to make it as interactive as possible. I'm there to teach people, not do marketing demos.

I personally think that SparkFun should do something like the SIK and make an SFIK (SparkFun FPGA Inventor's Kit), where you start off with the logic block boards, then move to a Papilio/Papilio Pro/Mojo v3 board.

I'm a bit biased being an FPGA designer myself, but I think getting FPGAs out there would be awesome. They are incredibly powerful pieces of hardware. Think of them as having the potential to be 100,000+ core processors which happen to run Finite State Machines rather than Machine Code.

From my experience I think there are 4 major barriers to FPGAs:

1) State Machines. I know a lot of us know what they are, but for someone coming into digital through Arduinos, they are a key piece that gets missed. I think a basic course in digital focusing on discrete logic would help; ultimately, that is all an FPGA really is. I would suggest a class/tutorial that goes through state machines and how to build logic with discrete chips. That is how I learned, and I constantly find myself mentally comparing what is happening in code to what we did with discrete logic. Maybe Pete could give us a video on FSMs...

2) Parallelized Hardware Coding. FPGAs are a totally different beast when it comes to programming. I learned the basics in my Digital Logic class using the Altera schematic editor, which lets you drag and drop basic logic blocks and wire them together. Once you understand how to build a circuit with FSMs like in number 1, FPGA design with a schematic editor tool is fairly easy to grasp. Then, once you get the hang of wiring together blocks, you can start converting some or all of it to code and see exactly how the circuit becomes code (at least I seem to remember being able to do that...). I think something that may help break the programming barrier would be an open source library of little code blocks (like the massive amount of example code available for Arduino). This would let people grab, say, a UART or SPI interface and drop it into their design, saving them the expertise needed to do more complex tasks. Learning to wire together code blocks and then modify them to do what you want is a great way to ease into the FPGA world. That is basically how I went from absolute noob to FPGA designer: working with pre-developed code, breaking it down, and piecing it together.

3) SMD only. I know a lot of people are getting into SMD soldering, but FPGAs tend to be relatively fine-pitch and have a ton of pins. This is kind of a turn-off, especially if you want to try prototyping. I think one solution is something similar to your old Spartan 3E dev board: a basic FPGA that can easily be integrated with a breadboard design. Be sure to have a good interface to the PC, though. FPGAs don't tend to come with USB drivers, so maybe an open source JTAG adapter or something would really help.

4) Tools. I agree tools aren't readily available, but I really don't think anything can be done to fix that. To build an FPGA image, you are going to need the proprietary software from the manufacturer to map your logic into their device. Perhaps the best thing here is to pick an FPGA that has freely available tools (I use Xilinx ISE, for example) and work up a tutorial video that shows people how to do the basics without worrying about details they don't need to know. For the more computer-savvy out there, don't forget that most of the tools can also be run from the command line with scripts. That can make things a lot less painful. Maybe provide a simple Makefile that makes compiling an FPGA design as easy as typing 'make'. Finally, for beginners, maybe a simple development GUI could be written to work with the code blocks I mentioned above: something basic that would let you drag and drop circuit blocks and then export the code, or simply call the real tools from the command line in the background. That would make things a lot easier.
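To make points 1 and 2 a little more concrete, here is a minimal Python sketch of the kind of reusable block described above: a UART transmitter written as a state machine. Python is only used to model the behavior; it is not HDL. The sketch assumes standard 8N1 framing (one start bit, eight data bits sent LSB first, one stop bit) and treats each loop iteration as one bit period. The function names are hypothetical. In real hardware, a counter would produce one "tick" every clock_hz / baud system clock cycles.

```python
def clocks_per_bit(clock_hz: int, baud: int) -> int:
    """How many system clock cycles one bit lasts (e.g. 50 MHz at 115200 baud)."""
    return round(clock_hz / baud)


def uart_tx_step(state: str, bit_index: int, data: int, start: bool):
    """One bit-period tick of the transmitter state machine.

    Returns (tx_line, next_state, next_bit_index). The TX level depends only on
    the current registers (state, bit counter, latched data), like a Moore output.
    """
    if state == "IDLE":
        # The line idles high; leave IDLE only when asked to send.
        return 1, ("START" if start else "IDLE"), 0
    if state == "START":
        return 0, "DATA", 0                      # start bit pulls the line low
    if state == "DATA":
        tx = (data >> bit_index) & 1             # data goes out LSB first
        if bit_index == 7:
            return tx, "STOP", 0
        return tx, "DATA", bit_index + 1
    if state == "STOP":
        return 1, "IDLE", 0                      # stop bit returns the line high
    raise ValueError(f"unknown state: {state}")


def transmit(data: int, ticks: int = 12) -> list:
    """Start from IDLE, request a send on the first tick, and record the TX line."""
    if not 0 <= data <= 0xFF:
        raise ValueError("data must fit in 8 bits")
    state, bit_index, line = "IDLE", 0, []
    for t in range(ticks):
        tx, state, bit_index = uart_tx_step(state, bit_index, data, start=(t == 0))
        line.append(tx)
    return line


print(transmit(0x41))  # [1, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1]
```

The IDLE/START/DATA/STOP structure maps fairly naturally onto a case statement in Verilog or VHDL, which is part of why a UART makes a good first "grab it and modify it" block.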

Just my 2 cents, not sure if they are worth that much or not.

I just got a Mojo V3 and took a while to browse their site. My recommendation: if you want to start teaching about FPGAs, work with them. They have done an incredible job with the Mojo. I think they covered almost every hurdle I could think of, and a couple I had overlooked.

All valid points.

I worked with FPGAs in college and really enjoyed them. They are great for memory-heavy tasks like working with video. I made a paint program with VGA output and touch screen input for one of my final projects. It was a mix of schematic and Verilog blocks, and I liked the flexibility you got developing for them. They are very different animals from microcontrollers, though, and I haven't had the work opportunity to play with them since. Those dev boards might be a good way to step back in.

David Jones of EEVblog has just done a great introduction video on FPGAs:

http://www.eevblog.com/2013/07/20/eevblog-496-what-is-an-fpga/

Here are a couple more good articles:

http://www.fpgarelated.com/showarticle/357/how-fpgas-work-and-why-you-ll-buy-one by Yossi Kreinin

http://www.programmableplanet.com/author.asp?section_id=2340&doc_id=265598& from Mike Field

Something I found really fun was getting my Papilio Pro to talk to an old LVDS laptop panel. It's a pretty easy project: at most you just need a power supply for the screen inverter, and you instantly have something to play with that would be hard or impossible with a normal processor. I found it much easier to "get" VHDL when I was using it to display patterns and graphics on the screen.

I studied Operations Research and some CS, and my first few years in industry were as a programmer. I got interested in electronics as a hobby about 2 years ago and developed some decent microcontroller skills, largely thanks to SparkFun. A few months back, I decided that I wanted to see what all the FPGA buzz was about, and I was lucky enough to get in on the ground floor with the Mojo as a Kickstarter supporter.

I won't say that it was easy. The shift in thinking from writing traditional code to designing circuits in Verilog was headache-inducing at first, but the tutorials and example code on the EmbeddedMicro site really helped to illuminate things for me. My first project was a simple scrolling display on an 8x8 LED matrix. I'm not sure whether I did it "right", but it works. The 50 MHz clock speed of the Mojo leaves plenty of cycles for PWM, scanning rows, and scrolling the text very smoothly and evenly. I implemented a simple RAM block to hold the characters and a second block to hold the message. My next step is to wire up a serial connection via the on-board microcontroller to display any message input on the serial port.
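As a rough illustration of the PWM part, here is a Python model of the common counter-compare approach: a free-running N-bit counter, with the output held high while the counter is below the duty value. This is an assumption about how such a display could be built, not the commenter's actual design, and the function names are hypothetical. With a 50 MHz clock and an 8-bit counter, one PWM period is 256 cycles, about 195 kHz, which leaves a lot of headroom for scanning rows on top.

```python
def pwm_period(duty: int, bits: int = 8) -> list:
    """Output levels over one period of a free-running `bits`-wide counter.

    The output is high while counter < duty, so duty = 0 is always off and
    duty = 2**bits is always on.
    """
    period = 1 << bits
    if not 0 <= duty <= period:
        raise ValueError("duty out of range")
    return [1 if counter < duty else 0 for counter in range(period)]


def pwm_frequency(clock_hz: int, bits: int = 8) -> float:
    """PWM frequency when the counter advances once per clock cycle."""
    return clock_hz / (1 << bits)


print(pwm_period(2, bits=2))        # [1, 1, 0, 0]
print(pwm_frequency(50_000_000))    # 195312.5
```

In an FPGA the comparison is just a comparator on the counter output, so one counter can drive as many independent brightness channels as you like.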

The Mojo takes a lot of the annoying logistics out of the way so that you can jump right in and start using the FPGA. The tutorials walk you through getting the free ISE WebPack design software from Xilinx, and EmbeddedMicro provides a Loader program that uploads your finished .bin file to the microcontroller which "programs" the FPGA, all seamlessly. I had Hello World (i.e. an LED tied to a button) up and running within minutes of getting the software stack installed. I highly recommend the Mojo as a first step toward understanding and using FPGAs.

If you want to learn how to design using FPGAs you could always have a crack at my informal FPGA course - http://hamsterworks.co.nz/mediawiki/index.php/FPGA_course. The learning curve is still quite steep - "Programming without loops? WTF!", however it is a lot less steep than doing it alone.

You have to remember you are trying to make something useful out of half a million logic gates and have it all work at tens or hundreds of megahertz. That would have taken the resources of a large company in the '80s or '90s...

You can also find some of my tinkering at http://hamsterworks.co.nz/mediawiki/index.php/FPGA_Projects

You know what would make FPGAs easier to learn? REAL WORLD USEFUL EXAMPLE PROJECTS!!!

I've taken a couple of runs now at trying to get comfortable with FPGAs, and it's hard to generate any interest in just creating an endless array of gates and outputs. Yeah, OK, all this works... BUT: what can I do with it?

I'd like to see some examples of hobbyist implementations of FPGAs, like maybe some sort of USB functionality to create a USB controller or USB filter, or some sort of Ethernet filter/detector, etc.

Sure, an FPGA can do almost anything if properly programmed, but what we need are some very simple and USEFUL examples of something other than a bunch of switches that toggle LEDs on/off.

Hobbyists need some sort of a functional payback if you expect to keep them engaged in the learning process. It's that simple!

$0.02

Do any of these have a native video out capability? Or would I have to build that from scratch for myself?

Hi there, it sounds like you are looking for technical assistance. Please use the link in the banner above to get started with posting a topic in our forums. Our technical support team will do their best to assist you.

That being said, you will need to look at the documentation for the specific product you are interested in. Usually, the answer is no... you need to build that yourself.

Hi guys,

I am new to FPGAs, and all I want to do is put some C code onto one. Can someone help me out with this?

I wrote a program in pure C; there are no external libraries or any other embedded code like assembly in it. I understand there are programs out there that can convert C code so it can be put on an FPGA. I'm not sure how to do this, or whether I can simply copy and paste the C code into a new project in some IDE and then put that on the FPGA (synthesize it). All my code does is run a calculation and then compare the result to a string. There are no peripherals or other hardware used. I'm hoping to achieve my goal without having to learn HDLs like Verilog in depth.

I have been looking at a Xilinx Spartan 6 board, which I believe would work for my project.

I agree that more resources should be available for beginners (this field is constantly growing). Apart from not giving the best definition of a uC, this video is one of the good ones. It explains FPGAs in a unique and intuitive way. https://youtu.be/7QOwyjFjqFQ

I also enjoyed playing around with Mojo boards. Those guys are doing wonderful job simplifying FPGA.

I have almost no college experience and know very little about how to use an FPGA, BUT I feel they're super easy to understand. You just have to learn binary, then learn how shift registers and memory work, as well as the physics of it, so you can imagine the electrons moving around like you'd imagine parts in a machine. Then you learn about logic gates. I took a digital logic class and aced it because I had looked it all up already, though I did learn you can't leave any pins floating. From there, you watch a YouTube video called 'How computers add numbers in 10 easy steps' or something like that, and repeat until you understand how logic gates can perform mathematical operations. I imagine knowing how a processor works might be helpful: pick one you might want to be an expert on and read the manual over and over until each little part you manage to understand links together. They don't write those manuals for noobs, so it'll take a while.

Then, just realize an FPGA doesn't use a clock unless you add one. It's basically logic gates, and you should know from learning about logic gates how each gate can be translated into a mathematical operator. I imagine programming an FPGA is all about the syntax of connecting pins to formulas, and the compiler takes care of routing all the logic blocks. Something like that. I see lookup tables in FPGA specs (when designing a circuit that uses an FPGA), and I imagine those save resources by simplifying complex calculations that have few possible outcomes. Just my guess.

Zero college: I read textbooks on electricity and they confuse me. I haven't even taken precalculus, and they expect me to know derivatives, differentiation, integration, and symbols I don't even know how to look up. Confusing stuff. My brother is taking a basic electricity class while I'm designing a switched-mode power supply. I ask him what they're teaching him because I'm curious about what I'd learn if I went to college: dependent/independent voltage/current sources, nodal/mesh analysis. I can't even really understand the descriptions of this stuff. College overly complicates things and pushes useless info. They have it worded in ways robots might understand well (correct and strictly worded syntax), but not people.

I personally love FPGAs despite not knowing how to use them, and I think they're superior to processors in most applications. Our brains surpass computers and work using neurons, which are like a cluster of logic blocks and connections. I kind of want to build an FPGA-based computer; it'd make game/OS emulators so much better if you could just switch architecture like that.
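On the lookup-table point: in most FPGAs, a k-input LUT is the basic logic element. It is a tiny memory holding the truth table of any k-input function, and the inputs simply form the address. The Python sketch below "programs" two 3-input LUTs as a full adder (sum and carry) and chains them into a ripple-carry adder, tying the LUT idea to "how computers add numbers". The helper names are hypothetical; real LUTs are typically 4 to 6 inputs, and many FPGAs also provide dedicated carry logic for adders.

```python
def make_lut(func, n_inputs: int) -> list:
    """'Program' a LUT: precompute func for every input combination (its truth table)."""
    table = []
    for address in range(1 << n_inputs):
        bits = [(address >> i) & 1 for i in range(n_inputs)]
        table.append(func(*bits))
    return table


def lut_read(table: list, *bits: int) -> int:
    """Evaluate a LUT: the input bits form an address into the stored table."""
    address = sum(bit << i for i, bit in enumerate(bits))
    return table[address]


# A full adder is two 3-input functions of (a, b, carry_in).
SUM_LUT = make_lut(lambda a, b, c: a ^ b ^ c, 3)                        # odd parity
CARRY_LUT = make_lut(lambda a, b, c: (a & b) | (a & c) | (b & c), 3)    # majority


def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned numbers one bit at a time, passing the carry to the next stage."""
    carry = 0
    result = 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1
        result |= lut_read(SUM_LUT, a, b, carry) << i
        carry = lut_read(CARRY_LUT, a, b, carry)
    return result | (carry << width)  # the final carry-out becomes the top bit


print(SUM_LUT)                # [0, 1, 1, 0, 1, 0, 0, 1]
print(ripple_add(200, 100))   # 300
```

Any 3-input function fits in the same LUT; only the stored table changes, which is what "configuring" an FPGA largely amounts to.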

Visit my blog for some FPGA projects using Verilog/VHDL: https://fpga4student.blogspot.com/

Behavioral or structural? VHDL is my favorite. The structural style is for hardware-oriented people, while behavioral is for the software-inclined. I once had an encounter with FPGAs while designing a crypto block in VHDL. I preferred the structural style, as it was closer to how components are physically interconnected in hardware. The hardware equivalent of a conditional "if" statement is the multiplexer. It is even possible to transform C/C++/C#/VB-like languages into pure VHDL, just by reading the C-like code line by line and following each goto jump.
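To illustrate the multiplexer point, here is a small Python model (illustrative only, with hypothetical function names) of a 2:1 mux built from AND, OR, and NOT, checked exhaustively against the equivalent software if/else. One hardware difference the model can't show: in a circuit, both inputs are computed all the time, and the mux only chooses which one reaches the output.

```python
def mux2(sel: int, a: int, b: int) -> int:
    """2:1 multiplexer from AND, OR and NOT gates: outputs a when sel is 1, else b."""
    not_sel = 1 - sel                  # NOT gate
    return (sel & a) | (not_sel & b)   # two AND gates feeding an OR gate


def if_version(sel: int, a: int, b: int) -> int:
    """The same selection written as a software-style if statement."""
    if sel:
        return a
    return b


# Compare the gate-level mux with the if statement for every 0/1 input combination.
for sel in (0, 1):
    for a in (0, 1):
        for b in (0, 1):
            assert mux2(sel, a, b) == if_version(sel, a, b)
print("mux2 matches if/else for all inputs")
```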

Well done. Thanks for the tutorial and the nice affordable board Sparkfun. For those who want to use FPGAs but don't want to learn/use Verilog/VHDL, I wrote a tutorial on Eliademy on how to code hardware with the Cx language: https://eliademy.com/catalog/basic-of-the-cx-language.html. I hope that it will be useful :-)

Just sharing my blog, where I talk about FPGAs, microcontrollers, etc.: http://electro-logic.blogspot.it

OK, good news! I know this is a bit of an old blog post, but I visited Xilinx in Ireland this week (March 2015), and they have developed a more user-friendly C-like interface designed to directly address the difficulty of using FPGAs, plus today's lack of Verilog-trained engineers.

Vivado HLS (uses C/C++): http://www.xilinx.com/products/design-tools/vivado/integration/esl-design.html

SDAccel (uses OpenCL): http://www.xilinx.com/products/design-tools/sdx/sdaccel.html

Definitely worth a look; it should allow us humble "C folk" to understand and use FPGAs.

http://fpga-tutorial.blogspot.com/2014/08/how-to-make-simple-program-in-fpga-vhdl.html :

This is the simplest beginner "hello world" explanation I have found. It's great, and it should help you too. It made quite a few concepts clear for me, which is why I'm sharing it, so that you all can benefit from it too.

I'm a new learner with a varied programming background and a specialty of none :).

Just reading over the comments here, I feel more confused than I think I should. If others on the learning curve feel the same way, I'd start with a super basic overview that relates FPGAs to more commonly known hobby electronics devices.

Perhaps a learning-model approach, like this:

1) An FPGA is, for all intents and purposes, an electronic breadboard and/or generic PCB with selectable digital components, all inside a single chip.

2) Just as one wouldn't give a breadboard a list of instructions (like PC programming), FPGAs don't take a sequential list of instructions. Instead, they take a list of wiring connections (i.e., netlists).

3) The concept of programming (really better named "circuit designing") with an FPGA is often confused because EDA (electronic design automation) tools use a PC-programming-like method to automatically create the circuit (i.e., the wiring connections and digital components) for you via abstractions (Verilog/VHDL), instead of having you pick the components and wire them together discretely.

a) As a reflection of this model, Verilog/VHDL has different "levels" of abstraction you can design circuits at: "Structural", where you just state which components to use and wire them together, or "Behavioral", where you describe the intended circuit's functionality and let the EDA synthesis tool determine how to wire together a circuit that produces the behavior you've described.
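The structural vs. behavioral distinction in point 3a can be sketched in Python. The netlist format below is made up for illustration: a structural XOR described as four NAND gates and the named wires between them, next to a behavioral version that only states the intended function. Real synthesis tools map designs onto the FPGA's LUTs and routing rather than literal NAND gates.

```python
# Structural: which gates exist and how they are wired.
# Each entry is (output_wire, gate_type, input_wires), listed in dependency order.
XOR_NETLIST = [
    ("n1", "NAND", ("a", "b")),
    ("n2", "NAND", ("a", "n1")),
    ("n3", "NAND", ("b", "n1")),
    ("y",  "NAND", ("n2", "n3")),
]

GATES = {"NAND": lambda x, y: 1 - (x & y)}


def simulate(netlist, inputs: dict) -> dict:
    """Propagate input values through the netlist and return every wire's value."""
    wires = dict(inputs)
    for out, gate, ins in netlist:
        wires[out] = GATES[gate](*(wires[w] for w in ins))
    return wires


# Behavioral: say what the output should be and let a tool work out the gates.
def xor_behavioral(a: int, b: int) -> int:
    return 1 if a != b else 0


for a in (0, 1):
    for b in (0, 1):
        print(a, b, simulate(XOR_NETLIST, {"a": a, "b": b})["y"], xor_behavioral(a, b))
```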

As somebody else said, you need look no further than the CompactRIO from NI, especially the low-cost Single-Board RIO. It is dead easy to program using a dataflow language (LabVIEW), and the results are very powerful indeed. In fact, a larger version of one of these is used at CERN on the Large Hadron Collider! The one I have has 27 digital I/Os, 16 analogue ADC inputs, and 4 DAC outputs. If that doesn't light your candle, then nothing will.

I gave a PowerPoint presentation last night to our robotics club about FPGAs. My big point was that it is not CPU vs. FPGA, it is CPU + FPGA. I have been around the industry for a lot of years at www.srccomputers.com, and I have worked with both Xilinx and Altera products. At the moment, my preference is Altera tools and the Verilog language. I have eval boards for both the Zynq-7000 and Cyclone V ST right now, and I love the SoC concept (these are similar offerings from both companies, with an ARM core plus programmable logic). I am laying out a PCB for a hobby UAV that uses the Cyclone V ST. My tack is to show what the chip can do and what it costs while developing ways to bring new people on board. After giving some examples of what is possible with these new SoC devices, the last bullet says "These are more complex to work with, but I can teach you." And now we come full circle to the article: there are a lot of concepts to learn for FPGA design. However, if a nice website builds out the curriculum, then the core does not have to be completely hashed out with every new person. In addition, free FPGA classes can help with hands-on learning.

The biggest problem with FPGAs is that NONE of the development software is Mac compatible. Even MPLAB X is Mac compatible these days, and yet, no FPGA manufacturer has seen fit to release a Mac compatible FPGA IDE.

My favorite acronym: VHDL, where V = VHSIC (Very High Speed Integrated Circuit), H = Hardware, D = Description, L = Language.

I designed a 1 GHz logic analyzer with little more than an Altera FPGA and a serial port. Try that with your latest processor of the day. I don't care if you can program in CUDA and all that; FPGAs and processors are simply different tools for different jobs.

I designed an FPGA interface for the Raspberry Pi that uses direct writes to the RPi GPIOs to achieve about 16 MB/s transfers in C (don't use the BCM2835 library functions; they will limit you to around 2.5 MB/s or less). This overcomes some of the limitations of the RPi by putting a precisely timed thread scheduler, touch panel hardware, 64 high-current I/Os, and an LVDS LCD panel interface in the FPGA. I'm working on an FPGA design that will convert HDMI signals (10 samples per clock) to LVDS video (7 samples per clock) so that the RPi video can be displayed on the panel. None of these are practical things for a processor to do. It's a giant circuit sandbox, and the tools are getting better for makers. I'll brag a little and tell you I've been doing this since 1978, when I designed what could arguably be called the world's first FPGA in college (I still have all the project data, and I got an A+!), using rectangles to draw out transistors. Somebody said FPGAs are going away; I laughed. They are just now coming into their own...

Interesting that you were working with logic gates in 1978. It wasn't really until the mid-'80s that FPGAs became commercially viable.

By any chance, did you know (or know of) the founders of Xilinx?

Texas Instruments was the company that paved the way; they were the true innovators. Signetics, Intel, AMD, and Motorola were all nascent in the new IC business as well. Xilinx and Altera came along quite a bit later. It took a really long time for the configurable logic concept to become really workable.

I remember being so excited about the IC stuff anyone could buy for the first time. I was the only one in my high school of 3000 students doing anything in the IC electronics building area! When I was in college, IC logic was available in increasing complexity, but the notion of configurable logic didn't really exist as far as I knew. Somehow I came up with the idea of reconfigurable logic (hence my college IC project). At the time, I'm sure others had ideas about configurable logic as well, but it took a very long time for the notion to become remotely as good in practice (as fast, as cheap, as reliable) as wiring up existing ICs. I made an array of interconnected NAND gates that were selectively enabled by a small array of NAND latches. Depending on how you wrote the NAND latches, you could alter the input-to-output function of the IC. Pretty simple, but those were amazing times when you really could come up with ideas that had never been done before.
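The cross-coupled NAND latch is the classic way to store one bit using nothing but NAND gates, which makes it a natural building block for configuration storage like that described above. Below is a Python sketch of such a latch (active-low set and reset, re-evaluated until the feedback loop settles), plus a hypothetical configurable cell whose stored bit either passes a AND b through or forces the output high. It illustrates the general idea only; it is not a reconstruction of the original 1978 project.

```python
def nand(a: int, b: int) -> int:
    """Two-input NAND on 0/1 values."""
    return 1 - (a & b)


def sr_latch(s_n: int, r_n: int, q: int, q_n: int) -> tuple:
    """Cross-coupled NAND latch with active-low set (s_n) and reset (r_n).

    The two gates feed each other, so re-evaluate until the outputs stop
    changing. With s_n = r_n = 1 the latch simply holds its previous state.
    """
    for _ in range(4):  # this two-gate loop settles within a few passes
        new_q = nand(s_n, q_n)
        new_q_n = nand(r_n, new_q)
        if (new_q, new_q_n) == (q, q_n):
            break
        q, q_n = new_q, new_q_n
    return q, q_n


def configurable_cell(a: int, b: int, cfg: int) -> int:
    """Hypothetical cell: with cfg = 1 it outputs a AND b; with cfg = 0 it is forced high."""
    return nand(nand(a, b), cfg)


q, q_n = sr_latch(0, 1, 0, 1)      # pulse set low: store a 1
q, q_n = sr_latch(1, 1, q, q_n)    # both inputs high: hold
print(q, configurable_cell(1, 1, q), configurable_cell(1, 0, q))  # 1 1 0
```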

I knew several people who went on to be very successful in the tech business, but I had (and still have) no interest in money-making or business. I've just always loved making things, so I maybe had opportunities but never took them. I've had a long and mostly comfortable career in logic and ASIC design, and that was good enough for me.

Getting back to the subject, FPGAs are the ultimate playground for somebody like me; processors can't touch the flexibility of what can be done here.

Woah, woah, woah, pump the brakes on misinformation. Texas Instruments was not the front-runner. Fairchild was the inventor of both discrete logic and the modern transistor, but most famously the integrated circuit, which everyone now uses. TI sued them when they came out with the IC because TI was still using discrete circuitry. Fairchild got super greedy, didn't pay the employees well, didn't innovate, and ultimately was hazardous to the mind. The lead designer (IC inventor) quit years later, took the people he liked, and started... dat dat dah... Intel, which then created the first microprocessor and literally birthed the chip of the computing future. Welcome to real history, not some stupid, false corporate dogma.

Side Note: AMD invented the first 64-bit cpu during the 16-bit era

It sounds as if microcontrollers and FPGAs are two different "languages". So what needs to be done is to let novices in electrical engineering experience FPGAs before microcontrollers so they will easily become acclimated to them. It's just like an American learning English and an Italian learning Italian, because that is the "base" or "pedestal" they grew up and adapted from.

I think FPGAs are probably pretty scary to program, but then the only experience I have is that my high school electronics class once did some stuff with GALs, which required boolean logic, e.g. (A && B && !C) || (!A && C) || !B. That was terrifyingly painful. I couldn't do it today even if I had the equipment, although I think we did manage to make it work. Translating an idea into logic like that is a headache.

Hopefully it's nothing like that. I suspect one big barrier is that you need to know a lot about logic beforehand and have a good grasp of that and how to reduce ideas to logic.
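On reducing ideas to logic: the tools for GALs and FPGAs minimize expressions like the one above automatically, but it helps to see it once by hand. When B is 0, the !B term makes the output 1. When B is 1, the expression reduces to (A && !C) || (!A && C), which is A XOR C. So the whole thing is !B || (A != C). The short Python sketch below (function names are just for illustration) checks that claim against all eight input combinations.

```python
from itertools import product


def original(a: bool, b: bool, c: bool) -> bool:
    """The GAL expression as written: (A && B && !C) || (!A && C) || !B."""
    return (a and b and not c) or (not a and c) or not b


def simplified(a: bool, b: bool, c: bool) -> bool:
    """Hand-simplified form: 1 whenever B is 0, otherwise A XOR C."""
    return (not b) or (a != c)


def truth_table(func) -> list:
    """Outputs for (A, B, C) = 000, 001, ..., 111 (C changes fastest)."""
    return [int(bool(func(a, b, c))) for a, b, c in product([False, True], repeat=3)]


print(truth_table(original))    # [1, 1, 0, 1, 1, 1, 1, 0]
print(truth_table(original) == truth_table(simplified))  # True
```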

http://trifdev.com/ makes a nice FPGA shield that's ready to use, out of the box.

I bought a DLP-FPGA USB Xilinx 3E-based FPGA Module with a very nice instruction manual from DLP Design and followed their tutorials. Worked for me. The folks at DLP were very good in answering all the questions I had. The board is fitted with an FTDI part with 2 logical USB ports which means that you can create some VHDL (I've never tried Verilog) to send logged data back to a host PC and also program the FPGA via a single USB cable. I also used the high speed version of the board which is fitted with 32Mbytes SDRAM. Learning how to create a memory controller block using the Xilinx ISE wizard took a lot of time but was well worth it. I eventually used the module, with the SDRAM acting as a frame buffer, in a battery-powered design to control the backplane of a custom-built LCD.

FPGAs are very different beasts from the MCUs on Arduinos and require a whole different mindset before you can start getting to grips with them. They are definitely not suitable for "whack-it-in" engineering prototype tasks like the Arduino is.

I'm in the midst of writing a presentation that explains FPGAs vs. MCUs and why FPGAs should be used in certain situations. When I finish it, I'll upload a video to YouTube for people to reference (when I have time).

I ordered a Guzunty (Google it), which is a CPLD board that attaches to a Raspberry Pi. (Still waiting on shipping to deliver; ~$25 US including shipping from the UK.)

CPLDs are like small FPGAs (hundreds of gates instead of millions) and are also programmed with VHDL or Verilog.

The Pi attachment means that I can do most stuff with Python on the Pi and do the appropriate things in VHDL on the CPLD. (E.g., I could write a stepper motor controller with ramp-up/ramp-down acceleration for the CPLD that responds to register values sent by the Pi, and not have to worry that Linux, which is not a real-time OS, can't do the pulse timing properly.)
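Here is a rough Python sketch of the ramp math such a CPLD controller might implement. The parameters (start rate, max rate, acceleration) stand in for hypothetical registers the Pi would write, and the 50 MHz clock is an assumption. For simplicity the rate rises by a fixed amount per step, which is a linear-per-step ramp rather than true constant acceleration in time. The second function shows what the logic would actually count: clock cycles between step pulses.

```python
def step_rates(total_steps: int, start_rate: int, max_rate: int, accel: int) -> list:
    """Step rate (steps/s) for each step of a move: ramp up, cruise, ramp down.

    The rate rises by `accel` per step until it hits `max_rate`, and the
    deceleration mirrors the acceleration so the motor stops as gently as it starts.
    """
    rates = []
    for i in range(total_steps):
        ramp = min(i, total_steps - 1 - i)  # distance to the nearer end of the move
        rates.append(min(start_rate + ramp * accel, max_rate))
    return rates


def step_period_cycles(rate: int, clock_hz: int = 50_000_000) -> int:
    """Clock cycles between step pulses at a given rate: what a hardware counter would count."""
    return clock_hz // rate


print(step_rates(10, 100, 400, 100))  # [100, 200, 300, 400, 400, 400, 400, 300, 200, 100]
print(step_period_cycles(1000))       # 50000
```

Because the counter reloads in hardware on every step, the pulse timing stays exact no matter what Linux is doing on the Pi side.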

Sparkfun doesn't sell any CPLDs, but breakout boards are available from other vendors if you don't want to go the Guzunty Pi route.

CPLDs are nice, and a lot of the smaller FPGA companies (Lattice, Microsemi, etc) still make CPLDs because it's a market they do really well in.

The biggest difference between CPLDs and FPGAs (besides their size) is their on-chip features. FPGAs have BRAMs/DSPs/ADCs/gigabit transceivers. CPLDs are more LUTs, flip-flops, I/O, and maybe a few other little knick-knack features.

I have never tried to 'program' an FPGA, but I did just learn how to program a PLC in ladder logic. I have programmed many things in BASIC-like languages for years, as well as programmed microcontrollers for several projects. The shift to programming a machine in ladder logic was fairly big. You can have several parallel processes running, and it 'seemed' much more difficult to "control" what was going on. As I learned more and more, I found that it was much easier to add certain types of functionality with ladder logic, since I knew that bit of 'code' would always run regardless of what else was going on in the 'program'.

Bottom line is that ladder logic (similar to what I understand about VHDL, etc.) IS a pictorial view of hardware that used to exist as switches and relays. I'm not sure of the hardware used inside a PLC, but the implementation of programming it is very different from traditional line by line code. Some may call it BAD, but I just call it 'different'.
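The "every rung always runs" behavior can be modeled as a scan loop: read inputs, evaluate every rung, write outputs, repeat. Below is a simplified Python sketch of a classic start/stop seal-in rung, with STOP modeled as True while the button is pressed. Real PLC scan details (input image timing, normally-closed wiring of the stop button) vary by vendor and installation, so treat this as an illustration only.

```python
def scan(inputs: dict, outputs: dict) -> dict:
    """One simplified PLC scan: read inputs and last outputs, evaluate every rung."""
    # Rung 1 (seal-in): MOTOR = (START or MOTOR) and not STOP
    motor = (inputs["START"] or outputs["MOTOR"]) and not inputs["STOP"]
    # Rung 2: the run lamp simply follows the motor coil
    lamp = motor
    return {"MOTOR": motor, "LAMP": lamp}


def run_scans(input_sequence) -> list:
    """Repeat the scan for each set of inputs and record the MOTOR output."""
    outputs = {"MOTOR": False, "LAMP": False}
    history = []
    for inputs in input_sequence:
        outputs = scan(inputs, outputs)
        history.append(outputs["MOTOR"])
    return history


sequence = [
    {"START": True, "STOP": False},   # press start
    {"START": False, "STOP": False},  # release start: the seal-in keeps the motor on
    {"START": False, "STOP": True},   # press stop
    {"START": False, "STOP": False},  # release stop: the motor stays off
]
print(run_scans(sequence))  # [True, True, False, False]
```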

Ctaylor, your opening statement cracks me up: "Like most engineers, I was first introduced to FPGAs in college". I finished college in 1980; sadly, I missed the class on FPGAs. :-) "Like most NEW engineers", maybe?

I agree that a lot of people try to get into FPGAs thinking that they are just souped-up microcontrollers, and that is simply not the case. They are far closer to a CPLD (complex programmable logic device) than they are to a microcontroller.

I find that the way I solve problems is much more in line with VHDL than with a traditional procedural language. I recently wrote a pong game on an FPGA for school, and I found that while it was harder than doing it in Python or something like that, I liked how I could solve the problem more. On a processor, I have to worry about when I do each thing and whether I have enough clock cycles to get through the gameplay math so I can update the visuals. On an FPGA, I can solve each issue independently. I can make some signals that represent the players, and then write a different bit of code that continually displays them and another that moves them. They all get synthesized into separate logic circuits that inherently run all the time. Think of it like building a circuit with code.
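The "separate circuits that run all the time" idea can be modeled in Python by having every block read the same current state and committing all updates together at the clock edge, roughly how Verilog non-blocking assignments or separate VHDL processes on one clock behave. The pong-style blocks and WIDTH below are hypothetical. In this model, if two blocks drive the same register the later one silently wins; in real hardware, synthesis would typically flag that as a multiple-driver error.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Block = Callable[[State], State]


def tick(state: State, blocks: List[Block]) -> State:
    """Advance one clock edge.

    Every block reads the same *current* state and returns the registers it
    drives; all updates are committed together afterwards, so the order of
    the blocks in the list does not matter.
    """
    updates: State = {}
    for block in blocks:
        updates.update(block(state))  # blocks never see each other's new values
    return {**state, **updates}


WIDTH = 8  # hypothetical screen width in pixels


def move_ball(s: State) -> State:
    return {"ball_x": s["ball_x"] + s["ball_dx"]}


def bounce(s: State) -> State:
    # Reverse direction when the next position reaches either edge.
    next_x = s["ball_x"] + s["ball_dx"]
    if next_x <= 0 or next_x >= WIDTH - 1:
        return {"ball_dx": -s["ball_dx"]}
    return {}


# Two registers swapping values: works in either order because both read the old state.
swap_blocks = [lambda s: {"a": s["b"]}, lambda s: {"b": s["a"]}]

print(tick({"a": 1, "b": 2}, swap_blocks))  # {'a': 2, 'b': 1}
```

Written as two ordinary sequential assignments (a = b; b = a), the same "swap" would leave both values equal to b, which is exactly the mindset shift the comment describes.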

Sounds a lot like a modern day PLC. It used to be switches and relays in a cabinet, now it's a box with ladder logic software to create the same thing.

When I first started using FPGAs in 1992, using VHDL was an expensive alternative. Schematic capture was the way I originally chose to enter designs. Xilinx had a program that would translate the netlists and then route the FPGA from that. It was kind of funny: back then it took close to 45 minutes to route a 3,000-gate FPGA (XC4003). I used this method until about 2002, when I switched to Verilog. For me, the transition was fairly easy. I thought of Verilog more as a verbal description of a schematic than as a programming language. I also do a lot of programming in C/C++, but I have always been able to keep my mindset for these two activities separate.

By the way, is SparkFun going to carry the Mojo? I, at the very least, would find this a very handy board.

I'd be curious to know more about the relationship between FPGAs and ASICs. I mean, I know what they are and such, but never having designed either I'm curious how they relate.

Specifically, ASICs have been getting a lot of press in the Bitcoin world of late, and I've heard that one approach to designing an ASIC is first to implement the design in an FPGA and then migrate that same design to the ASIC. The idea that the two technologies would use the same description language is something I had not considered. It seems plausible, and if this is really the way it's done then it makes learning FPGA design a lot more attractive to me personally.

Plus that Mojo board is dead sexy, so there's that.

You can develop for an FPGA and then use a synthesis tool to target an ASIC implementation. Fundamentally, it's the same design flow, with more steps for the ASIC.

My only comment is that you're not going to use Xilinx or Altera's tool chains to develop for ASIC unless you really need to. Most ASIC designers will use Mentor Graphics, Synopsys, or someone else.

Sure, but the front-end flow is basically the same.

I think one of the most important aspects is having good scripts that automatically run the compilation/synthesis/P&R flow. There are a lot of tasks involved in converting the RTL into a bitstream ready to be sent to the FPGA, and that can be hard to manage when you start learning. Further work on the tool chain needs to be done, but what? :-) An abstraction layer? A low-level component library (counter, ALU, ...)?

Not sure what you're getting at. All FPGA tool chains have gained quite a few features to ease the burdens that once were there 10+ years ago.

Plus, since TCL has become a standard scripting language for the industry (thank you Synopsys), you can push your entire design through bitstream with a few quick commands.

And as far as the Verilog/VHDL side goes, there are free tools out there that run on Linux and Windows that anyone can dive into and start using. Once you understand that you don't need to see an actual LED light up (or actual smoke come out of the part) to learn something, you can do huge, complicated designs, just like the professionals do. That last step of taking your design to silicon has a learning curve, sure, but you can divide those learning experiences up along these natural boundaries. I have been working in the silicon industry for years, and along with the pay-for tools we use the free tools (Verilator, Icarus, and GTKWave) quite a bit, as we do our software development on chip/board simulators so that when silicon arrives we are mostly ready to go.

Very true! If you just want to learn the basics of digital logic and HDL, open source is the way to go. My only concern with open source for HDL is the lack of supportability. For example, someone learning HDL sees that they can do a neat little trick to get what they want, but when they push it through the open source compilers, it doesn't work. It is very hard to keep compilers on top of the language standards out there, and I commend anyone working on the open source tools for keeping up.

I don't think the logic is all that hard: counting from 0 to 1, AND, OR, and NOT covers about 99% of it. The tools are proprietary and generally not at the quality of the equivalent software toolchains. That is a major barrier to entry for software folks to transfer over. Xilinx or someone did a study back when languages were taking over from schematic capture, and software folks were preferred over electrical engineers for doing logic design, because all you had to teach them was the concept of things happening in parallel; they already knew programming languages, compilers, and other similar tools. Today, of course, that is different. If someone were brave enough to make a programmable device whose guts were open, so that the open source community could make the compiler, place and route, etc., you would eventually see tools that don't suck, run everywhere, and give an overall better experience.

The real reason for adding a comment, though, is that if you go over and look at what xmos.com has, which wants to be competition for CPLDs and small FPGAs, that may or may not be a good stepping stone for software folks who want to do more than just bit-bang some GPIOs. It's not the same languages, sure (C and asm instead of Verilog and VHDL), and not necessarily the same experience as pure logic, but understanding the signals you want to send or receive, and using a simulator that produces waveforms you view in GTKWave or something similar, is all the same experience as with CPLDs and FPGAs.

I will have to poke a hole in open sourcing FPGAs.

With these three thoughts, could someone open source it and let people make it? Sure, but it'll take a lot of capital and a lot of work to get something out there where they don't see a dime! I will fully support anyone who wants to do this, just know the obstacles.

The best implementation I saw of an open source FPGA was built from multiple chips: one board of this design was one SLICE of an FPGA. As for the idea that software programmers need not learn the hardware, I have to say they need to learn the guts in order to get the solution they want.

I teach digital electronics (PLTW for those of you in the know) in a high school and FPGA boards comprise part of the course. We don't get into the HDL much at all, which is auto-generated from their logic designs in Multisim. I have them look at the VHDL a little, however, which helps them to appreciate what brings their simulated circuits to life on actual hardware. I think this is a great entry into this great world of FPGA development, and I believe NI has a 30-day trial version of Multisim as well.

Good on you for teaching a valuable set of skills for a device that gets no attention until late in everyone's college career.

May I suggest a way to help introduce HDL into learning?

Relate HDL code to the digital logic circuits they're building. That way, you can apply the HDL syntax as an afterthought, and the kids should understand the dos and don'ts of HDL. You could say, "See this counter we built with logic devices? Let's code it in HDL for an FPGA and see if we get the same results."
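As a warm-up before the HDL version, the same counter can be written down gate by gate. Here is a minimal Python model (an illustration, not classroom HDL) of a 4-bit synchronous binary counter: each bit's D flip-flop is fed by an XOR gate, and a chain of AND gates decides when a bit toggles. The function names are hypothetical.

```python
from typing import List


def and_gate(a: int, b: int) -> int:
    return a & b


def xor_gate(a: int, b: int) -> int:
    return a ^ b


def counter_next(q: List[int]) -> List[int]:
    """Next state of a synchronous binary counter; q[0] is the LSB.

    Bit i toggles only when every lower bit is 1. The "carry" is the
    output of a chain of AND gates, and each XOR gate computes the value
    its D flip-flop will capture on the next clock edge.
    """
    carry = 1                    # count enable tied high
    nxt = []
    for bit in q:
        nxt.append(xor_gate(bit, carry))
        carry = and_gate(carry, bit)
    return nxt


def to_int(q: List[int]) -> int:
    return sum(bit << i for i, bit in enumerate(q))


q = [0, 0, 0, 0]
seen = []
for _ in range(18):
    seen.append(to_int(q))
    q = counter_next(q)
print(seen)  # counts 0 through 15, then wraps around to 0, 1
```

Checking the breadboard circuit, this model, and the HDL against the same count sequence is one way to show students that all three describe the same thing.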

I'm in the same boat as many... FPGAs are all over, but I don't really fully understand them. Ironically, I use them constantly! And, my case is probably a good example of how they are becoming pervasive while removing the difficulties in implementing them...

You see, I use National Instruments CompactRIO controllers for specific control applications, as very high-speed replacements for old PLC technology. The interesting thing in the cRIO is that it has an FPGA backplane built into the unit, which you can directly access and leverage when programming in LabVIEW. So, I can take my LabVIEW program, pick specific routines or code that would benefit from running at FPGA speeds (and experiencing true parallelism for execution), and just make a few conscious tweaks or planned adjustments while creating the code. When I deploy my code into the controller, it handles all of the direct programming and configuration of the FPGA, and I don't have to think about it. It's like some kind of cyber-magic... And the speed bump in the processor that I get by pulling those functions out of the CPU and right into the FPGA can be amazing!

I think if more and more programming and development platforms can make it easy and seamless to integrate FPGAs, they will continue to grow and become even more pervasive. Sometimes, the trick is to just make tools to let people use them, without having to understand them. I mean, how many people actually know how a computer CPU works, vs. how many people use one every day? Or how many circuit or board designers know the specifics of how every chip they are using works, as opposed to just knowing the functionality and general specs? It may be that a little less hardcore understanding of the intricacies of FPGAs and a little more "ignorance is bliss" could make many lives so much easier... Heh

Valid points.

But the thing is, FPGAs are used in extremely complex designs, which require a lot of very specific knowledge of EE concepts. What the Mojo and Papilio do is get a cheap DIY system into the hands of people willing and wanting to learn.

As stated in one of my comments, the best way to learn the FPGA is to learn the building blocks (AND/OR/NOR/NAND/XOR/etc. gates, flip-flops, LUTs, etc.). After you understand the basics, translate them to HDL. This way you have a 1:1 mapping between the circuit and the code. From here, there are two things you can do: 1) learn the features of the FPGA (very advanced), or 2) learn the tool chains used to program the chips. I would recommend 2; it gives you the best ability to understand how to fully utilize the FPGA.

I will be more than happy to do livestreams on demand for anyone who wants to learn.

Yep, FPGAs are hard. You are working at the absolute lowest level of computing hardware possible. It is equivalent to connecting 74LSxx parts with jumper wires on a breadboard. Add to that the fact that timing matters immensely, and you have a complex and difficult-to-learn environment. Learn async digital logic. Learn synchronous digital logic. Work your way up the ladder. When you understand why most FOR loops in Verilog cannot be synthesized, then start on more complex stuff. Sometimes concepts are hard for a reason and not everybody gets them. That's OK. Sometimes something that looks cool takes a lot of work, maybe more work than you want to put into it. That's OK too. In reality, if you are looking for high-level programming constructs to put down large blocks of functionality on an FPGA, you don't need an FPGA. You need a microcontroller.

I agree, specific concepts of FPGAs are very complex, and hard to understand, but once you know the basics and how to apply them to the concepts, it makes things easier.

As a comment on the for loop: HDL supports for loops, but not the way you think. They are usually used in "generate blocks," which basically means the for loop is used to generate blocks of hardware to use in the design. So you want to generate 50 flip-flops? Write an HDL for loop.
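A rough Python analogy (not HDL, with hypothetical names) of what such a generate loop means: the loop runs once, while the circuit is being built, and stamps out N flip-flop instances. At run time there is no loop at all, just N flip-flops that all clock together. Here the generated hardware is a 50-stage shift register.

```python
from typing import List


class DFlipFlop:
    """A single D flip-flop: q takes the value of d on each clock edge."""

    def __init__(self) -> None:
        self.q = 0


def generate_shift_register(n: int) -> List[DFlipFlop]:
    # The "generate loop": executed once at build time, producing n instances
    return [DFlipFlop() for _ in range(n)]


def clock(chain: List[DFlipFlop], data_in: int) -> int:
    """One clock edge for the whole chain; returns the last stage's output."""
    old = [ff.q for ff in chain]          # every flip-flop samples before any changes
    inputs = [data_in] + old[:-1]         # stage i is fed by stage i-1
    for ff, d in zip(chain, inputs):
        ff.q = d
    return chain[-1].q


chain = generate_shift_register(50)
# Feed in a single 1 on the first edge, then zeros
outputs = [clock(chain, 1 if cycle == 0 else 0) for cycle in range(60)]
print(outputs.index(1))  # 49: the pulse reaches the 50th stage on the 50th clock edge
```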

I worked with some of the Altera boards in school and used them successfully in several projects. One of the websites I still have bookmarked from that time is http://www.asic-world.com/verilog/index.html; it has some tutorials and examples. I have not explored the open source community to see if it has more/better help, but this is what helped me.

I am a software engineer with a master's in CS. I am dabbling in electronics as a hobby.

Understanding the "non-procedural" part of programming FPGAs is not all that tricky: if you have used languages such as Haskell or Prolog and encountered declarative programming during your computer science education, you would be right at home with Verilog or VHDL. Unfortunately, a lot of software engineers didn't have these things at university, not to mention people who learn a bit of JavaScript or PHP and think themselves programmers...

I think the worst things someone can do when trying to teach programmable logic to software people are to: a) say it is programming, or b) say that Verilog is similar to C.

Neither is true in the way software engineers understand these terms, and it sets the stage for a major problem due to not speaking the same language.

It is a similar thing with programmable voltage references: it took me a while to realize that "programming" them actually means soldering one or two resistors! A software engineer thinks programming = source code/compilers/execution flow, and the confusion happens right there, before even getting to the substance of the discussion.

Jan

I agree with everything you said, and this is a known area that FPGA companies are going to solve. Xilinx has solved it with Vivado HLS (High-Level Synthesis), and Altera has their version as well.

Why really learn HDL when you can have a tool that translates it for you?

I wouldn't really call their solution "solving" the problem of HLS. Vivado is useful, but a lot of research and improvement is necessary before it can really be considered "solving" HLS. Many, many companies have tried tackling the problem of HLS. It is not a simple one.

I recommend looking at the Youtube videos of Xilinx customers using it and how easy it is to use.

I've used it on creating a SHA-256 implementation and it worked well. So, don't knock it 'til you try it.

What makes you think I haven't tried it? I've worked in HLS companies for the past 5 years, and am well aware of the various solutions on the market, as well as the history of companies that didn't make it. HLS is nowhere near "solved," and especially not by Vivado. The biggest strength of Vivado's HLS is integration with backend synthesis and the rest of the toolchain. Yeah, that's a huge deal, and it will probably be the HLS of choice for plenty of people for that reason. But it's not really an amazing HLS solution; it's a decent one that cleanly integrates into the tool chain that most people use.

Let me know when these DIY people get serious and start using Microsemi SmartFusion FPGAs that don't have firm errors. (Oops, were we not supposed to mention the limitation of SRAM-based FPGAs?)

I'm not sure anyone doing DIY cares about SEU recovery. If MicroSemi were serious about getting hacker attention, they'd release an eval kit for a small fraction of the $299 their entry level SmartFusion2 one is going for. The unrestricted free tools is a nice touch though.

They will, the first time the project calculates 1+1 and gets 9.

This was such a silly comment I had to sign up just to reply.

I work for Microsemi. I design integrated circuits. I use XILINX parts for emulation and a lot of other tasks in-house. (Microsemi doesn't make anything nearly as big as the largest Xilinx parts.) Am I a DIY hobbyist? Would a hobbyist spend over $100K at a time to build multiple emulator systems?

We run things in validation for weeks at a time. Are SEUs real? Sure. Do they render parts unusable? Why don't you ask people who run programs on computers? You know, in that stuff called RAM?

So for starters, my $.02 is to ask a simple question: why would I use an FPGA? I have only passing familiarity with the term and with some of the devices I've heard have FPGAs in them, but before I'd even pick up a book (or wiki link) to figure anything else out about them, I'd kind of want to know what types of problems they solve at a conceptual level. Again, just my $.02.

Scrubb, very good question!

FPGAs are really in their own world. You know the telecommunications network people love and enjoy? Routers, switches, and backhaul stations on cell towers all use FPGAs. Cool, huh? They are also used in video displays, because it's easier (and cheaper) to build a VGA/HDMI/DVI system in an FPGA than in an ASIC. Your external hard drives for backups? They're there too (not so much anymore).

GPU companies use FPGAs to model their GPU processes in order to optimize their designs. Same goes with the CPU companies...

As an "Oh My Fact," Google uses FPGAs to process the Google Street View images. By utilizing their protobufs they can decode the data fast and give it to you fast. Pretty slick, huh?

I could go on, but then I'd end up ranting...

So basically... they are like microcontrollers designed to do one thing fast and reliably, but not as singular as ASICs?

Not really, they are different than microcontrollers.

It's hard to explain well, but think of an FPGA as having programmable logic gates that can be configured in different ways to fit its task. It actually does this by converting your logic into lookup tables. Most tasks run essentially in parallel, subject to gate delays, and anything procedural must be written a specific way, using clocks, edges, and blocking calls, to force things to run line by line.
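To make the lookup-table idea concrete, here is a minimal Python sketch (an illustration, not how any vendor's tools are implemented) of turning a logic expression into LUT contents: enumerate every input combination, record the output bit, and from then on "evaluate" the logic just by indexing. Real FPGA LUTs have a fixed number of inputs, commonly 4 to 6; the function names here are hypothetical.

```python
from itertools import product
from typing import Callable, List


def build_lut(func: Callable[..., int], n_inputs: int) -> List[int]:
    """Record func's output for every input combination.

    The resulting list of 2**n_inputs bits is what a LUT stores; the
    original logic expression is no longer needed after this step.
    """
    table = [0] * (2 ** n_inputs)
    for bits in product((0, 1), repeat=n_inputs):
        index = sum(b << i for i, b in enumerate(bits))  # first input is the LSB
        table[index] = int(bool(func(*bits)))
    return table


def lut_read(table: List[int], *inputs: int) -> int:
    """'Evaluate' the logic by using the inputs as an address into the table."""
    index = sum(b << i for i, b in enumerate(inputs))
    return table[index]


# Example: a 4-input function, (a AND b) OR (c XOR d)
def f(a: int, b: int, c: int, d: int) -> int:
    return (a & b) | (c ^ d)


lut = build_lut(f, 4)
print(lut_read(lut, 1, 1, 0, 0), lut_read(lut, 0, 1, 1, 1))
```

Any 4-input function, however it is written, fits in the same 16 bits, which is why the tools can map arbitrary logic onto identical LUT cells.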

A microprocessor has a core that is expecting commands and it acts on those. So you program a huge list of commands, your program, and the micro steps through line by line evaluating and doing actions on that code.

They are different enough, and both have their specific purposes, so much so that they make FPGAs with micros on board: either soft cores (a virtual micro made from gates) or even some hard cores. I think Xilinx makes a chip with an ARM on board.

I prefer micros for my day-to-day tasks; where FPGAs really shine for me is in things that are memory-intensive, such as video and picture work.

Exactly. Plus you can always reprogram them to do something different.

To give a good example, look at the autonomous vehicle competition. You have a microcontroller handle GPS, motor control, sensors, etc. That's really fast (16 MHz or so). Doing interrupts on the MCU does take away from the speed (a bit), but it's generally fast.

Now if you did the same thing on an FPGA, you could parallelize the process. So instead of all the data going to one system for handling, you can have GPS data work with the sensors and send control signals to the motors as necessary. I've been dabbling on my ZedBoard when I can. It's an ARM processor + FPGA. I really wanted to do AVC with it. The ARM is loaded with Linux running a constant python/perl/C/C++ script/program to gather GPS data, display it on the onboard OLED, and send control signal commands to the FPGA (which would do just PWM). I knew this was too easy, so why not process digital video from a GoPro and broadcast it via an ad-hoc network?

Pretty slick, huh?
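The "FPGA does just PWM" part of that split is a nice first FPGA project, because the hardware is only a counter plus one comparator per channel. Here is a minimal Python model of that structure (hypothetical names; a behavioral sketch, not the commenter's design):

```python
from typing import List


def pwm_channels(duties: List[int], period: int, cycles: int) -> List[List[int]]:
    """Counter-and-comparator PWM, one output waveform per channel.

    A single free-running counter counts 0..period-1; each channel's
    output is high while the counter is below that channel's duty value.
    In an FPGA every comparator is separate logic, so adding channels
    costs area, not time.
    """
    outputs: List[List[int]] = [[] for _ in duties]
    counter = 0
    for _ in range(cycles):
        for out, duty in zip(outputs, duties):
            out.append(1 if counter < duty else 0)
        counter = (counter + 1) % period   # wraps back to 0 at the end of each period
    return outputs


waves = pwm_channels([2, 6], period=8, cycles=16)
print(waves[0])
print(waves[1])
```

The ARM side would then only need to write new duty values; the waveform generation itself never waits on the processor.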

Another example is audio filtering. Most people would use DSPs directly to do this, but those are expensive. With an FPGA, you can take in the audio (ADC), do your filtering, and output it to a DAC. It reminds me of a design someone did: they built an Arduino inside a Spartan-3 to control LEDs.
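For the audio-filtering case, a common building block is a FIR filter: multiply the most recent samples by fixed coefficients and add them up. Here is a minimal Python sketch in integer (fixed-point style) arithmetic. On an FPGA each tap's multiply could be its own hardware multiplier working in the same clock cycle, whereas this model computes them one after another. The coefficients are an arbitrary example (a 4-tap moving average), not a designed audio filter.

```python
from collections import deque
from typing import Iterable, List


def fir_filter(samples: Iterable[int], coeffs: List[int], shift: int) -> List[int]:
    """Integer FIR filter: y[n] = (sum over k of coeffs[k] * x[n-k]) >> shift.

    Integer coefficients plus a final right shift mimic fixed-point
    arithmetic, which avoids floating point entirely.
    """
    taps = deque([0] * len(coeffs), maxlen=len(coeffs))  # delay line, newest first
    out = []
    for x in samples:
        taps.appendleft(x)                 # oldest sample falls off the far end
        acc = sum(c * t for c, t in zip(coeffs, taps))
        out.append(acc >> shift)
    return out


# 4-tap moving average: coefficients of 1, then divide by 4 with a shift of 2
step = [0, 0, 4, 4, 4, 4, 4]
print(fir_filter(step, [1, 1, 1, 1], shift=2))
```

The smoothed output ramps up over four samples instead of jumping, which is the low-pass behavior a moving average provides.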

One way to think about it is to consider where FPGAs came from. They basically started with the GAL technology where you could put a bunch of gates on a single chip and then program it for the functionality you needed. I used a 22V10 that way on a crowded board that needed a bunch of simple "and" and "or" functionality to reconfigure an address bus based on a logic input. I really liked that little chip, it replaced a half-dozen other chips that wouldn't have fit. Even better, I could re-program it when our needs changed - much easier than unsoldering a bunch of chips! That technology led to PLD, then CPLD, then FPGA. They all have the same basic mindset - a (huge) toolbox of parts you can connect together programmatically to implement pretty much whatever logic function you want. One big use for them is when you need to chew through a lot of data fast. A microprocessor running a program can only operate on a few pieces of data at a time, one after another. An FPGA can "drink from the firehose" and keep up. It's like the speed of a dedicated hardware implementation, combined with the small size and flexibility of a CPU. Happily, even though Lattice discontinued their original 22V10, the Atmel one I referenced above is still in production - and available in a breadboard-friendly DIP package! At less than two and a half bucks (quantity 1 from Digikey, ATF22V10C-15PU-ND), they're affordable enough to just get one and play with it.
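A GAL like the 22V10 is organized as a programmable AND array feeding OR gates, so every output is a sum of products. Here is a minimal Python sketch of that structure, with a hypothetical address-decode example in the spirit of the comment: a RAM chip select that moves to a different address range depending on a MODE input. The signal names and address ranges are invented for illustration.

```python
from typing import Dict, List

# A product term: input name -> required level (1 = true, 0 = complemented)
Term = Dict[str, int]


def sum_of_products(terms: List[Term], inputs: Dict[str, int]) -> int:
    """OR together product terms, each an AND of (possibly inverted) inputs."""
    return int(any(
        all(inputs[name] == level for name, level in term.items())
        for term in terms
    ))


def address_inputs(addr: int, mode: int) -> Dict[str, int]:
    # Only the pins the equations use: the MODE input and the top two address lines
    return {"MODE": mode, "A15": (addr >> 15) & 1, "A14": (addr >> 14) & 1}


# Hypothetical chip select for a 16 KB RAM (active-high here for simplicity):
#   MODE=0 -> selected at 0x8000-0xBFFF (A15=1, A14=0)
#   MODE=1 -> selected at 0x0000-0x3FFF (A15=0, A14=0)
RAM_CS: List[Term] = [
    {"MODE": 0, "A15": 1, "A14": 0},
    {"MODE": 1, "A15": 0, "A14": 0},
]

print(sum_of_products(RAM_CS, address_inputs(0x9000, mode=0)))
print(sum_of_products(RAM_CS, address_inputs(0x9000, mode=1)))
```

Changing the memory map means editing the term list and re-programming the chip, which is the "re-program it when our needs changed" advantage over rewiring discrete gates.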

What device do you use to program Atmel GALs?

I have been dealing with the big, expensive mess left by an embedded use of two FPGAs. For most uses, the best thing to do is dedicate a microprocessor to the job. This is in fact what ARM has done with its multicore ARM chips: you dedicate a job to a processor matched to the job and make only high-level decisions on the basic machine. The concept of the FPGA is for the most part obsolete. The support from the manufacturers SUCKS and BLOWS CHUNKS. The FPGA market has essentially topped out and is set for a long and miserable death.

The only exception to FPGAs being a rotten idea is where you need a lot of very high-speed computing, where many complex computations need to be done in single clock cycles to drive a process. The hotshot processors of today, such as ARM processors, have pretty well doomed even this area. Imagine an FPGA trying to compete against a very highly parallel processor. It doesn't work.

The reason FPGA computing seems so strange is that few people consider that there can be a whole lot of processes going on in an FPGA at the same time. It sort of is a multi-core processor. I know there are more details, but it isn't really any different. With the birth of quad-core and larger ARM chips (think Parallella.org), this advantage is gone. You will always have to deal with concurrency in any machine where more than one process is going on at a time. This works like interrupts.

This seems maybe just a wee bit calculated to provoke a flamewar.

Everybody stay chill.

No, you are missing the point. The FPGA is hardware, and as such it can outperform a processor by up to 100 times (depending on the application, of course). It can work concurrently too, of course. I agree the support and documentation are terrible, and that is why you need a CompactRIO.

I am sorry to say that I don't think the FPGA industry will be ending any time soon. Being an FPGA designer for a living, I have seen how vitally integrated they are into industries that will continue to use them for years to come.

I hope you get a chance to work with FPGAs in a better setting some time, they truly are amazingly powerful pieces of hardware. Think of them as 100,000+ core CPUs running Finite State Machines rather than Machine Code.

Perhaps you want to do high-speed digital data acquisition (100 MHz+), DSP filtering of RF signals, extracting audio from HDMI streams, processing 1080p video streams in real time, processing raw video from cameras for embedded vision, controlling 64 servos in real time, or filtering a 3 Mb/s S/PDIF stream.

The low latency (measured in nanoseconds) and ease of interfacing allow FPGAs to do things that cannot be done with CPUs, even when running a real-time OS.

The issue you describe is very odd, which makes me wonder if it was someone who wanted to use an FPGA without the proper research.

As for your comment that it's a failing area due to quad-cores and multi-core ASICs/ASSPs: you are sadly wrong. Why would I want to spend 5 million dollars on the tapeout of an ASIC/ASSP to do video integration into a system? Why would I limit my system to just running that process? Look at the Internet traffic of today. That's a lot of processing. Pushing that data through a pipeline (like you're suggesting in a CPU system), you're wasting a minimum of 4 cycles per packet (at best). With an FPGA, you can process that data just as fast and have no overhead. Not only that, but you can parallelize the data stream! Xilinx's 28G transceivers are used in parallel for backhaul. So if you have 16x28G processing packets (16 in parallel), you're outperforming a multi-core system by gigaflops.

I think one of the biggest problems is a lack of good free tooling and allowances for "emulation." I mean, waveforms are useful, but they are part of that learning curve, I think. For good free tooling, there just isn't much there. You can cobble together an environment using GHDL, make, and GTKWave, but it's not easy. Another big complaint is that VHDL and Verilog are both such terrible languages. Verilog lets you do crazy procedural stuff that's not synthesizable (and that isn't obvious), and VHDL is so verbose and inconsistent it's painful. There are some attempts to fix this, such as pyhdl, which translates to VHDL, but it's not perfect, and there is a huge lack of documentation for newbies if you go that route. Also, there is absolutely no free tool I've seen for debugging HDL code, especially when it works in simulation but not in hardware. I think a lot more has to happen than writing good documentation to make FPGAs easy to use. It boils down to better tooling, and letting newbies use simpler tools than a waveform viewer and testbench code.

FPGA vendors (Xilinx and Altera, mostly) have free versions of their design software. The Mojo and Papilio boards use parts that are supported by the ISE WebPACK version, so you don't need to buy anything to program the chips!

I disagree with the VHDL and Verilog being terrible languages. Not to insult you, but it tells me that you did not learn them well, or understand what they do. The FPGA and ASIC community uses these languages very heavily (especially Verilog). If you want to take existing code and convert it, Xilinx has Vivado HLS which will take C/C++/SystemC code and convert it to Verilog/VHDL for you.

For debugging code, Altera and Xilinx both offer free software that lets you do this. The open source area of debugging HDL is not there (nor should it be), because once you have HDL written, what do you do with it? It makes no sense to write HDL and not push it to a chip.

I disagree that it comes down to simpler tools. It comes down to the teaching. FPGAs are always going to be complicated, and will get more so as time goes on. It's a matter of teaching it the right way.

Not to insult you, but if you think that VHDL and Verilog are good languages, then you don't know much about language design. They are terrible. The number of times I've tracked down a Verilog bug to "oops, accidentally misspelled a wire's name; why didn't I get warned about this instead of synthesis just silently assuming I wanted a completely new wire?" really makes me mad. The verbosity of VHDL is practically legendary. I won't dispute that they are used heavily in the real world, but that is hardly evidence that they are good languages.

I never said that they are good languages (nor do I think they are). But then again, I have used them enough that I know their flaws and workarounds. Plus, the quick code analysis of the tools has been able to catch things like this.

Luckily, compilers now are able to catch this (if you don't synthesize the design, and just elaborate it). So instead of wasting hours, you waste minutes.

As my grandfather always liked to say, "A good carpenter never blames his tools."

One of the big confusions that people have to keep in mind about VHDL is that unlike most "programming languages", it's not really designed for computers to parse. It's more designed as a description language for documentation (for humans, or at least engineers) to read.

At some point, computers got powerful enough for a subset of VHDL to be synthesizable, but at the core - the verbosity comes from the fact that humans and not computers were the original parsers of VHDL.

I've worked closely with an FPGA engineer for about 12 years, as well as being his boss for several. From my perspective, it is true: you can do some VERY tricky stuff on FPGAs. This has been for a high-end video grabber with different compression codecs onboard. We max the capacity, and have several different builds for different functionality that we load on the fly. Over the years, we have had many issues with tools: we get a new release to fix one issue and discover another. Due to the nature of the product, we have to do a lot of non-automated testing, and we have often found one build that works and other builds that don't due to (and this may not be correct) automated gate routing that gives unequal-length routes to memory, causing timing issues when accessing SRAM. Of course, since I'm not the engineer, I don't have a reliable estimate of how much is the engineer and how much is the tools :) Either way, we couldn't do what we do without them, but boy are they a manpower hog!

Totally understand. It's tough to make a tool that is constantly being worked on stay reliable from release to release without breaking customer designs. Most customers will find a good build and use it until they have to get a new chip for their next product.

Luckily, I know Xilinx (I don't know about Altera) has done a lot to create good documentation and teaching for customers/users.

Well, I've made some small projects, and my workflow was quite painful: using GHDL/GTKWave for development and then, when I needed to actually "deploy" to the FPGA, copying everything into ISE (because using only ISE WebPACK itself was even more painful). And my argument is that VHDL/Verilog suck as languages in general. I don't want to try to describe hardware in C++; I just hate how verbose and old-fashioned they seem to be. But yeah, I'm no expert, but for my own personal learning curve, VHDL and Verilog (I chose VHDL, but tried Verilog a bit as well) were about 25% of my learning difficulties, lack of good tooling was another 25%, and the other 50% was the fundamental paradigm shift that you're /describing/ hardware, not programming hardware.

I totally get where you're coming from.

If you had a good teacher or a way to apply what FPGAs can do, I think you'd enjoy it.

As I stated down below, I'm in the #sparkfun IRC channel all the time, and I'm helping out someone with FPGA development. I do livestreams on demand, so if you want to, I can teach you some of the tools of ISE WebPACK and HDL coding (mostly in Verilog).