Last month (March 2014), I was fortunate to travel to New York City to participate in a working group at Columbia University's Tow (rhymes with "plow", not "stow"; what a preposterous language English is) Center for Digital Journalism about the ethics and legality of drones and sensors in journalism. I was there to provide an opinion on the implications of FCC rules for journalists seeking to use drones or sensors as part of their reporting.

While there, I met Deirdre Sullivan, who is a lawyer on staff at the New York Times. Over dinner, she told me that the Times has an R&D group that does a lot of interesting hacking, and that they use a lot of SparkFun stuff, and would I like to meet them?

Some of the lab's employees, L to R: Noah Feehan, Maker; Michael Dewar, Data Scientist; Alexis Lloyd, Creative Director

Of course, I jumped at the opportunity. Here are some pictures of the projects they're working on (or have been working on lately).

This smart mirror project, dubbed "Reveal", delivers headlines, tracks your medicines and integrates your Fitbit data with your morning grooming rituals. It incorporates a Kinect, RFID and environmental sensors to give you more information than you can possibly digest before you've had some coffee.

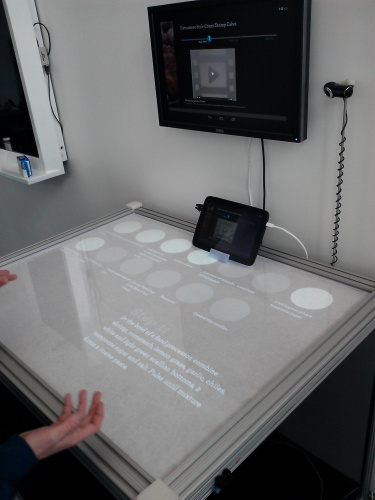

"Julia" is an interactive food preparation surface that delivers recipes from the Times to your kitchen. Not only does she walk you through the recipe, step by step, she also provides visible locations for all the ingredients (the circles in the picture above) and highlights which ones should be used for the current step. A gesture recognition sensor allows her controls and touchscreen to remain unsullied during even the messiest portions of meal prep.

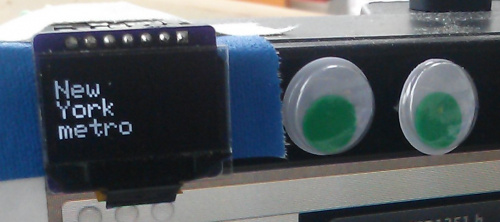

In the "not sure how I feel about this" arena, each member of the group has an OLED display on their monitor that shows what Noah describes as a "stream of the topics a group has browsed", or a representation of the group's information diet. The project, called "Curriculum", anonymizes the data and does not monitor HTTPS traffic, and participation is strictly opt-in. Noah tells me that it's a great idea stimulator, but it totally wrecked any chance of surprise regarding intra-office Christmas presents.

This little pendant, called "Blush", is a super-portable front-end for Curriculum. It's constantly feeding your conversation via BLE to a speech recognition program, which compares your topics of discussion with your Curriculum feed and lights up when you hit on a topic in conversation that correlates with your recent information diet.
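I don't know how the lab actually does the matching, but the basic idea is easy to sketch. Here's a minimal Python version, assuming the speech recognizer hands back plain text and the Curriculum feed is just a list of topic strings. The function names (`matched_topics`, `should_light_up`) and the sample topics are made up for illustration, not anything from the real project.

```python
import re

WORD = re.compile(r"[a-z0-9']+")

def matched_topics(transcript, recent_topics):
    """Return the recent topics whose words all appear in the transcript.

    Hypothetical helper: the real Blush matching logic is not described
    in this post, so this is plain word overlap and nothing smarter.
    """
    spoken = set(WORD.findall(transcript.lower()))
    hits = []
    for topic in recent_topics:
        topic_words = WORD.findall(topic.lower())
        # A topic counts as a hit only if every one of its words was spoken.
        if topic_words and all(word in spoken for word in topic_words):
            hits.append(topic)
    return hits

def should_light_up(transcript, recent_topics):
    """True if the conversation touched at least one recent topic."""
    return bool(matched_topics(transcript, recent_topics))
```

Calling `should_light_up("I was reading about drone journalism yesterday", ["drone journalism", "smart mirrors"])` would come back true, since both words of the first topic show up in the sentence.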

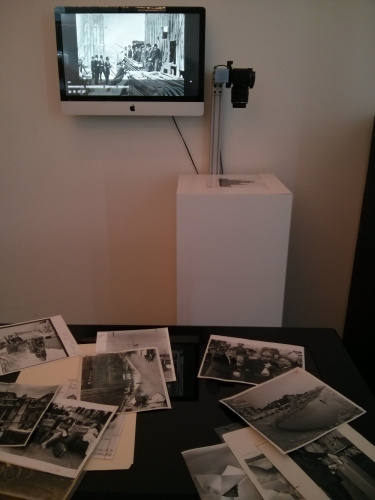

The Times maintains a huge archive of old pictures. Many of these pictures are absolutely priceless, and haven't been digitized yet. A simple one-bit scan of most (all?) issues of the Times exists, but the image quality in those scans is terrible. The R&D group is working on a device, called Lazarus, to automate replacing those scans with better images. They can enter publication date information for a picture, place it on the surface and capture an image. The program then retrieves the existing digital copy of that issue, uses image processing to find the likely matches for the picture in question, then swaps the new image into the archive in place of the older, low-res copy.
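The group didn't tell me which image processing technique Lazarus uses for the matching step, so take this as a guess at the general shape rather than their method. The sketch below uses a simple average hash: threshold each image at its own mean brightness, then pick the archive picture whose bit pattern differs least from the new capture. It assumes every image has already been shrunk to the same small grayscale grid (a list of rows of 0-255 values), and the names `average_hash`, `hamming` and `best_match` are mine.

```python
def average_hash(pixels):
    """Turn a grayscale grid into a list of bits: 1 where brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming(bits_a, bits_b):
    """Count the positions where two equal-length bit lists differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b))

def best_match(new_capture, archive_crops):
    """Return the name of the archive crop closest to the new capture.

    archive_crops maps a hypothetical crop name to its grayscale grid;
    all grids are assumed to share the new capture's dimensions.
    """
    target = average_hash(new_capture)
    return min(
        archive_crops,
        key=lambda name: hamming(target, average_hash(archive_crops[name])),
    )
```

A thresholded hash seems like a reasonable fit here because the existing scans are one-bit to begin with, so there isn't much more than a light/dark pattern to compare against.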

Finally, my favorite. The parasite-looking thing on Noah's neck (dubbed "Locus") connects with others of its kind via embedded Bluetooth and distributed base stations, creating a resilient distributed mesh network. It warms up when you are close to a place a friend has recently been close to, the idea being that it reminds you that you share a space, if not a time, with the people you care about. What I like most about it has nothing to do with the intended use, though; Noah tells me that when it warms up it feels exactly like shame.

I was excited and honored to pay a visit to the New York Times building, and I'm glad that our work is in some way being incorporated into the work done by that amazing and prestigious organization.

If you want more information about what the NYT R&D Group is doing, you should check out their site, visit their blog, or follow them on Twitter! Thanks for taking the time to show me around, guys!
