An adaptive filter is a time-varying filter that keeps updating its weight vector to minimize an error signal that is fed back into the system. A filter that adapts over time has some useful characteristics: for example, it can be trained to perform a task without the designer having any prior knowledge of the system the filter is designed for.

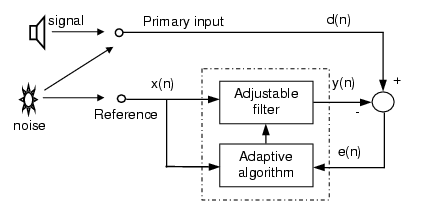

Photo courtesy of www.dspalgorithms.com

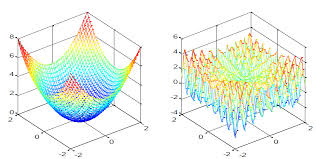

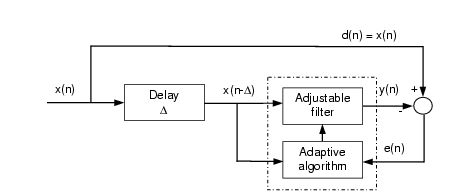

Adaptive interference canceling can be modeled as a feedback control system (diagram above) where d(n) is the desired output, y(n) is the output of the filter, and e(n) is the error, the difference between d(n) and y(n) at the summing junction. The error is fed to the adaptive algorithm after each iteration. The primary input, which serves as d(n), carries the signal you want along with noise. The reference input carries noise that is correlated with the noise in the primary input. The goal of the system is to get y(n), the output of the filter, as close as possible to d(n). Because the filter only sees the reference, the only part of d(n) it can match is the noise, so what remains in e(n) is the cleaned-up signal.

The performance surface is the plot of the expected value of the instantaneous squared error (the squared error at each sample) against the filter weights. For a linear filter with stationary inputs, this mean squared error is a quadratic function of the weight vector, so the surface is a bowl, and at the bottom of the bowl the gradient equals zero. The LMS (Least Mean Squares) algorithm estimates the gradient from a single sample of the error, without squaring, averaging, or perturbing the weight vector. From a single data point, the LMS algorithm can calculate the "steepest descent" along the performance surface by starting out with a wild guess and, in a way, asking itself, "Which way to low-flat land?" Since each iteration is based on a noisy estimate of the gradient, the weights pick up a fair amount of noise, but it averages out over time and is reduced even further when the weight vector is kept from updating every iteration.
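To make the loop concrete, here is a minimal sketch of an LMS noise canceller in plain Python. It is not the MATLAB project from this post. The function name `lms_noise_canceller`, the sine wave standing in for speech, the 2-tap noise path, and the values of `num_taps` and `mu` are all assumptions chosen for illustration.

```python
import math
import random


def lms_noise_canceller(primary, reference, num_taps=4, mu=0.005):
    """Return (cleaned, weights), where cleaned is the error signal e(n) = d(n) - y(n)."""
    weights = [0.0] * num_taps   # the initial "wild guess" for the weight vector
    history = [0.0] * num_taps   # most recent reference samples, newest first
    cleaned = []
    for d, x in zip(primary, reference):
        history = [x] + history[:-1]
        y = sum(w * h for w, h in zip(weights, history))  # filter output y(n)
        e = d - y                                         # error at the summing junction
        # LMS update: step against the gradient estimated from this one sample
        weights = [w + mu * e * h for w, h in zip(weights, history)]
        cleaned.append(e)
    return cleaned, weights


def mean_square_difference(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


# Synthetic test data (made up for illustration)
random.seed(0)
n_samples = 6000
speech = [math.sin(2 * math.pi * 0.01 * n) for n in range(n_samples)]
noise = [random.uniform(-1.0, 1.0) for _ in range(n_samples)]

# Assumed noise path into the primary input: 0.8 * noise(n) + 0.3 * noise(n - 1)
primary = []
for n in range(n_samples):
    previous_noise = noise[n - 1] if n > 0 else 0.0
    primary.append(speech[n] + 0.8 * noise[n] + 0.3 * previous_noise)

cleaned, weights = lms_noise_canceller(primary, noise)

# Compare the last 1000 samples, after the weights have had time to settle
tail = slice(n_samples - 1000, n_samples)
mse_before = mean_square_difference(primary[tail], speech[tail])
mse_after = mean_square_difference(cleaned[tail], speech[tail])
print(f"noise power before: {mse_before:.4f}, after: {mse_after:.4f}")
```

Note that the useful output is `cleaned`, the error e(n), not the filter output y(n). In this sketch the first two weights should drift toward the assumed path gains of 0.8 and 0.3.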

Example of a performance surface. Courtesy of http://www.ijcaonline.org/research/volume132/number10/tripathi-2015-ijca-907639.pdf

This is similar to how feedback in conference calls is eliminated. The feedback is the noise that can be heard on both ends but is definitely stronger on the producing side. The side with the "weaker" feedback is the desired output.

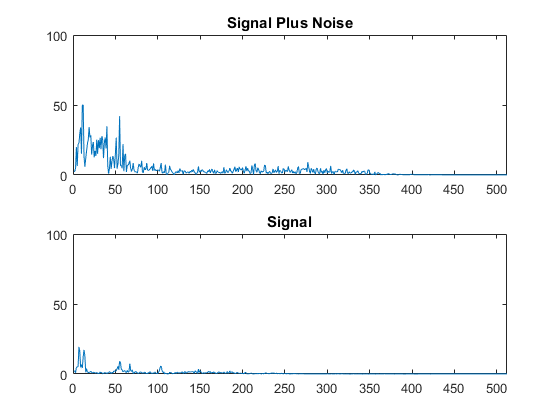

Below is an example project I created in Matlab (code provided in GitHub link below). I read a passage of a book over the sound of what could be a couple of lawnmowers. The first half is the raw playback of the recording. Toward the end it is nearly impossible to distinguish my voice over the noise. The second half is the live, adaptively filtered version of the original recording.

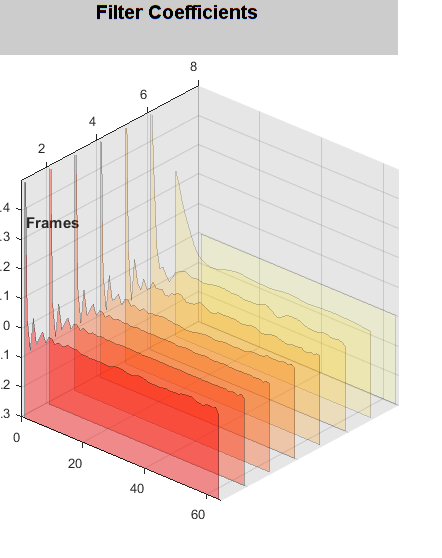

You can see from the waterfall plot above that once the algorithm has hammered out the range of the lowest possible error e(n), the noise becomes nearly non-existent. From the first 10 samples you can see the algorithm found the path of steepest descent, then went up a bit and back down a bit until it figured out where the minimum error occurred. These filters usually start out with a high error and then settle close to zero. In the last run you can see the error didn't start out so high and settled quicker than in the previous runs.

Here you are looking at the FFT of the desired output d(n) and the FFT of the error e(n). You can see how quickly the noise is canceled, and how well. The last 250-300 samples hardly change at all.

Here is the link to the GitHub page where I have pushed this program.

Food for thought: Given the control diagram below, the adaptive algorithm could be changed to become an adaptive predictor. With one input (could be something like the whole history of winning Lotto numbers) and a delay you are on your way to making your own prediction machine.
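As a rough sketch of that idea, the same LMS update can be pointed at a delayed copy of a single input so that the filter learns to predict the next sample. The function name `lms_predictor`, the tap count, the one-sample delay, and the step size are assumptions for illustration. The input is a sine wave because it is perfectly predictable from its own past, which a truly random sequence would not be.

```python
import math


def lms_predictor(signal, num_taps=4, delay=1, mu=0.1):
    """Predict signal[n] from the num_taps samples ending at signal[n - delay]."""
    weights = [0.0] * num_taps
    predictions, errors = [], []
    for n in range(len(signal)):
        # Delayed input vector, newest first; samples before the start count as zero
        past = [signal[n - delay - k] if n - delay - k >= 0 else 0.0
                for k in range(num_taps)]
        y = sum(w * p for w, p in zip(weights, past))  # prediction of signal[n]
        e = signal[n] - y                              # prediction error
        weights = [w + mu * e * p for w, p in zip(weights, past)]
        predictions.append(y)
        errors.append(e)
    return predictions, errors, weights


# One input: a sine wave with a period of 20 samples
signal = [math.sin(2 * math.pi * n / 20) for n in range(2000)]
predictions, errors, weights = lms_predictor(signal)

early_error = max(abs(e) for e in errors[:20])
late_error = max(abs(e) for e in errors[-100:])
print(f"largest error, first 20 samples: {early_error:.3f}")
print(f"largest error, last 100 samples: {late_error:.2e}")
```

The only structural change from a noise canceller is where the two inputs come from: the desired signal is the current sample and the filter input is the delayed history of that same signal.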

Photo courtesy of www.dspalgorithms.com

I may or may not already be working on this. For anyone interested in learning more and seeing the math, I highly recommend "Adaptive Signal Processing" by Bernard Widrow and Samuel D. Stearns.

Very cool! I think it would help if you just explicitly wrote the update rules for an LMS filter, as this is one of the few times the math is simpler than the English (at least for me). If we just start with some initial guess at the filter H, we get our guess y_pred = H * x. All we do is:

err = y - y_pred

H(n+1) = H(n) + mu * err * x

Where mu is just some scaling factor so we don't overshoot dramatically or something. Written that way, a scary concept like "adaptive filtering" seems really simple!
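To see what "overshoot" means here, the update rule above can be run with a single weight and a constant input. This is only a toy sketch: the function name `lms_error_history`, the target gain of 2.0, and the two values of mu are assumptions for illustration. With x = 1 the error is multiplied by (1 - mu) at every step, so it shrinks for 0 < mu < 2 and grows otherwise.

```python
def lms_error_history(mu, steps=20, target_gain=2.0, x=1.0):
    """Run the scalar update H(n+1) = H(n) + mu * err * x and record |err| per step."""
    H = 0.0                    # initial guess at the filter
    history = []
    for _ in range(steps):
        y = target_gain * x    # what the unknown system actually outputs
        y_pred = H * x         # our guess
        err = y - y_pred
        H = H + mu * err * x   # the LMS update rule
        history.append(abs(err))
    return history


small_step = lms_error_history(mu=0.1)
large_step = lms_error_history(mu=2.5)
print(f"mu=0.1: first |err| = {small_step[0]:.2f}, last |err| = {small_step[-1]:.2f}")
print(f"mu=2.5: first |err| = {large_step[0]:.2f}, last |err| = {large_step[-1]:.2f}")
```

With the small step the error decays steadily. With the large step each update overshoots the target by more than it was off before, so the error grows instead.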

I thought the same thing actually! But I wasn't sure if it was because I already knew the math or not. I'll update it during lunch. There are very few definitions needed to understand the equations as well. Thanks for the tip and confidence!

Thank you for developing an adaptive filter! I am interested in the second part of your work with the filter predictor. Is your project ready? Can I look at your work on the predictive filter? Thank you.

Corresponding to Simulink, is the d(n) going to be the input on the block diagram labeled "Desired" and the Reference x(n) going to be the "Input" on the block diagram? Also to implement this in Simulink do you need to have a loop of some kind to keep on looping until you get an error of about zero to send the signal through?

It would be so awesome to have Bluetooth noise canceling modules to install onto my Audio Technicas.

Nice intro, but as an embedded maker, MatLab et al. don't thrill me. I used to work at a large industrial R&D center where LOTS of work was done with MatLab. The PIs did great work and wrote great papers, but in the end the customers (industry/govt) complained that they had to rebuild entirely based on published papers because the MatLab code would not run on the portable systems they built.

It would be VERY cool to see an ANC application built on something like the Teensy Audio board or perhaps an FPGA device (possibly combined with teensy or other general purpose front end).

I think that I missed something important at the beginning. It appears that we're using an adaptive filter to make y(n) the most like d(n). But, if you already have the signal that you want (ie, d(n)), what is the point of this filtering approach? If you want d(n), you already have d(n). Weren't you done before you even started?

Clearly, I'm missing something fundamental. Can anyone clear up my misunderstanding?

I could have set this up better. An example: I'm conducting an interview at the beach, but when I play back the recording the sounds of waves crashing nearby are too distracting. If I had another recording of the waves made nearby (both recordings starting and stopping at the same time), the program I wrote would eliminate the sounds of the ocean waves. The interview recording is chosen to be the primary signal, and the additional recording is the reference. The block diagram shows the noise being injected into both the primary and reference signals. The desired signal d(n) is a noisy version of what you want. Does that make sense?

Thanks for the reply. Swapping around the point of view is helpful. You're starting with the interference signal and with the mixture. Using this adaptive filter, you can remove the interference from the mixture to reveal the desired signal. Much better. Totally got it. Thanks!

Oh, and because I forgot to mention it, I'm a big fan of anyone who writes about signal processing. Thanks for taking on this topic!

Thank you. This is something I do as a hobby. I'm also writing a predictive filter, which I will push to the same repo, and I've written some other filtering tools as well, including SER and RLS adaptive filters. I do like being able to hear the processing take place, and the visuals also help. I'm hoping to learn enough to move on to Neural Network modeling to do image processing. Love Matlab.

Yeah, I love Matlab, too...when someone else pays for it. In my case, I have it at work. But at home, I don't want to spend $2125 for a license (plus extra for individual toolboxes like the Signal Processing toolbox). Sure, if you're a student, you can get it for $49-$99, but I don't meet any of their requirements:

"Student software is for use by students on student-owned hardware to meet course requirements and perform academic research at degree-granting institutions only. It is not available for government, commercial, or other organizational use." http://www.mathworks.com/academia/student_version/?s_tid=tb_sv

So, for my home/hobby hacks, I can't use Matlab. I have to use Python+NumPy+SciPy+Matplotlib. This python-based stack isn't nearly as nice as Matlab, but it gets the job done.

I use the same tools as well, NumPy and Matplotlib. I typically use the University Engineering Lab computers or download new 30 day trials.