I was recently putting together a lecture on protocols when an interesting question popped into my head: Why do I constantly use 9600 bits per second to communicate with stuff? There are a huge number of systems that rely on asynchronous serial. Why did we end up using 9600 instead of 10,000, or perhaps 8192 (2^13) bps? It turns out we use 9600 bps because of the reaction time of carbon microphones.

Back in the dawn of computing, you needed a thing to attach your computer to a phone line. You needed an acoustic coupler!

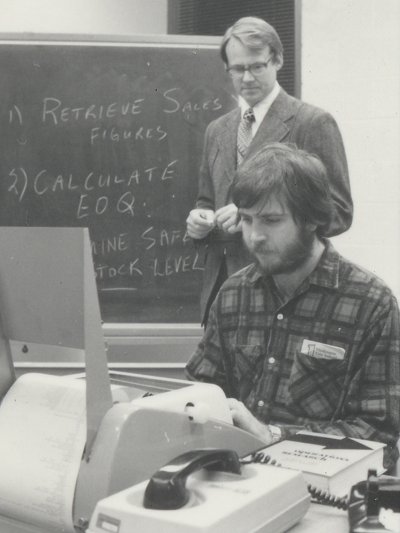

An early teletype connected to the phone network from UMSL's Grace's Place

An acoustic coupler has the same bits as the handset just in reverse. A telephone handset is placed in a cradle designed with rubber seals to fit snugly around the microphone and earpiece of the handset. The microphone part of the handset picks up sounds from the speaker in the cradle, and the earpiece in the handset broadcasts sounds to a microphone in the cradle. (Based on a description from UMSL's Grace's Place)

Once you had your computer beeping and blipping over the phone lines you could transmit data. But, for those of us who remember phone lines, the audio was often filled with static, making it difficult to understand the person talking. Computers had similar issues:

-> From Wikimedia <-

From retrocomputing on stackexchange:

110 and 300 baud were chosen at the time primarily because both modems were intended to be used over copper wire and "unconditioned" telephone lines, with at least one part of the connection going through an acoustic coupler. The worst-case for acoustic couplers talking to carbon microphones is somewhere around 300 baud. Since this is a worst-case, this is what we get.

Microphones designed for human conversation in the 1950s and '60s were unable to react faster than 300 times a second, thus limiting reliable signal switching to 300 a second. It’s important to note that 300 baud is not 300 bps due to different encoding methods, but this seems to be the origin of the 300 divisor of modern serial communication rates.

Phone lines also had issues:

Bell 103 - Asynchronous data transmission, full-duplex operation over 2-wire dialup or leased lines; 300-bps data rate. Ideal for the "low demand" user who exchanges files infrequently with another PC user or an on-line bulletin board. Comparable to ITU V.21. This modulation is well suited for bad phone lines – as the communication guys used to say, "103 modems will work on barb wire." Source

I need to use more barbed wire in my projects!

The acoustic coupler origin story is similarly intriguing. Couplers were necessary only because AT&T was concerned about third-party devices bringing the phone grid down (and losing their monopoly), so they made electrically-connected devices illegal. Thus acoustic couplers were created to provide an air-isolated buffer between the phone network and your DIY, home-brew computer. Thankfully the FCC ruled in 1968 that directly-connected devices would be allowed, and communication speeds steadily increased.

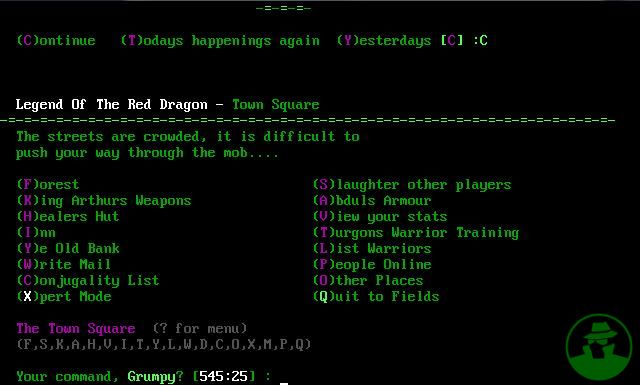

From gamespy

Now I’m going to go play Legend of the Red Dragon in tribute to my 14.4 kbps modem and the BBS I ran when I was in middle school (geeks don't die, they just change games).

Sorry Nate, not quite right. The 300 bps was not because the telephone carbon mics couldn’t handle higher frequencies but more a result of the properties of copper wire and human hearing. In fact, the mics were not designed to pass frequencies below about 300 Hz because human hearing falls off rapidly below this frequency and lower frequencies add little to the intelligibility of speech. Similarly, above about 3500 Hz, cable attenuation rises sharply and, again, hearing acuity drops off. So for decades, telephone systems were optimized for 300-3500 Hz. For this reason, the 300 bps modems used frequency shift keying at frequencies between 1070 and 2225 Hz. The bit rate limitation was mostly a result of the time it took to reliably detect the frequency shifts with the technology of that time. When the modem spec was released in 1962, the mainstream technology was discrete transistors (still a lot of germanium): no ICs, no microprocessors. I don't recall exactly, but the early modems might have even used LC filters. The UART (called a distributor in those days) in the Teletype Model 33, which was the workhorse as your photo suggests, was a mechanical affair with one electromagnet and some clutches and gears. It was limited to about 110 bps, so there wasn’t much economic incentive to have higher speed modems.

You don't explicitly draw the connection, but I assume 9600 was chosen because it's 32 * 300? Likewise, 38400 = 300 * 128, and 57600 = 300 * 192.
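The arithmetic in that comment is easy to check. Here is a minimal sketch in Python; the list of rates and the helper name `multiple_of_300` are just illustrative choices, not anything from the original post:

```python
# Common serial rates (an illustrative, non-exhaustive list).
STANDARD_RATES = [300, 1200, 2400, 4800, 9600, 19200, 38400, 57600, 115200]


def multiple_of_300(rate):
    """Return rate / 300 if it divides evenly, otherwise None."""
    quotient, remainder = divmod(rate, 300)
    return quotient if remainder == 0 else None


# Map each rate to its multiple of 300.
multiples = {rate: multiple_of_300(rate) for rate in STANDARD_RATES}

for rate, factor in multiples.items():
    print(f"{rate:>6} bps = 300 * {factor}")

# The "rounder" candidates from the article are not multiples of 300.
for odd_rate in (8192, 10000):
    print(f"{odd_rate:>6} bps -> multiple of 300? {multiple_of_300(odd_rate) is not None}")
```

Every rate in the list comes out as a whole multiple of 300, while 8192 and 10,000 do not.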

Also, LoRD lives on, in the form of legend of the green dragon, and it's just as fun as you remember.

Actually, it has to do with encoding. Up to 2400 bps the baud rate and bit rate were equal. After that, you got increases by encoding multiple bits per symbol. 9600 bps was four bits per symbol at 2400 baud. 14.4 kbps, 28.8 kbps, and 33.6 kbps were encoded on a 3429 baud symbol stream.
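The relationship behind that comment is simply bit rate = symbol rate × bits per symbol. A tiny sketch, using only the 9600 bps figure quoted above (the function name `bit_rate` is hypothetical):

```python
def bit_rate(symbol_rate_baud, bits_per_symbol):
    """Bits per second carried by a stream of symbols."""
    return symbol_rate_baud * bits_per_symbol


# One bit per symbol: baud and bps are the same number.
print(bit_rate(300, 1))

# Four bits per symbol at 2400 baud, as described in the comment above.
print(bit_rate(2400, 4))
```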

You're correct. Sorry it wasn't clear! Once 300 became the standard multiple, 9600 bps ended up being a sweet spot of processing power and reliability for the machines that needed to communicate during the 1980s and 1990s. At some point the number of devices that supported 9600 bps reached a tipping point. Today, when processing power is less of a concern, the momentum of the sheer number of devices that communicate at 9600 bps (and 57600 and 115200) dictates continued device support at these serial comm rates.

Oh, and LoGD looks great!

There is also the limitation of how fast the RS232 interface can operate. While this depends on the length of the cable, 115200 is pushing it except for short distances, so most systems max out about there. RS422 can go faster, and I do recall an experimental fiber optic terminal interface back in the '70s at Digital that pushed something like 1 megabaud. That used a highly modified VT100 terminal, BTW.

In the day of the «glass-TTYs» the maximum baud rate supported was 19200. This was the practical maximum for devices such as the 8251A used in VT-100s and several others up through the 1980s. I did some investigations as to why that was, having these nifty little Picotux devices that ran their serial port at 38400.

I remember needing to upgrade 8251's in the mid 80's to 16450's and later 16550's to get more speed!

Cool! Now I know why my pre-OBD II GM fuel injection communicates at 8192 baud!!

Thanks!

My question isn't "Why did we end up using 9600 instead of 10,000, or perhaps 8192 (2^13) bps?", but "Why do we still use 9600 as a default for modern serial devices like GPS receivers and beginner Arduino demos? Why not a higher 'standard' rate like 19.2k, 57.6k, or 115.2k?"

I don't think this is entirely true. Saying that "The worst-case for acoustic couplers talking to carbon microphones is somewhere around 300 baud" can only be true when you take into account several other factors, such as acceptable error rate (what is "worst case" based on? one error bit out of how many?). Also, saying it's how fast the carbon mic can react ignores the fact that it's using FSK and the actual tone being sent is between 1070 Hz and 2225 Hz, depending on who's the initiator/answerer and whether a 0 or a 1 is being sent (https://en.wikipedia.org/wiki/Bell_103_modem). In the '80s, we were sometimes able to get these couplers to run at 150% rate (450 baud) depending on the quality of the phone line (some of my friends were out of the same telco CO, so better quality between us), so this isn't telling the whole story. The frequencies chosen for the FSK had to take into account the 4 tones needed (so each side has separate frequencies for 0 and 1), make sure each tone fell within the range the phone system was created to convey (I believe 300-3300 Hz), and make sure the frequencies weren't related in such a way that they could cancel each other out or otherwise be confused for one another by the PLL circuitry on the other end that had to decode them. Once optimal frequencies were determined, I'd bet that the speed of a bit (and therefore BPS) had more to do with how long it took the PLL to lock on and detect the bit, while still having over 1/2 bit time to actually convey the correct bit to the attached computer. At 2225 Hz, with 300 BPS, that's just over 7 audible sine waves per bit. That seems reasonable, considering it probably needs 2 cycles to lock. I have no hard evidence that this had anything to do with the design, but from years of playing with 300 baud modems and the physics of sound, I'm betting this is what was boiled down to "how fast a carbon mic can react".
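The "sine waves per bit" figure is just tone frequency divided by bit rate. A rough sketch of that arithmetic follows. The comment only names 1070 Hz and 2225 Hz; the other two tones below are the standard Bell 103 assignments as I understand them, and the helper name `cycles_per_bit` is made up for illustration:

```python
# Bell 103 FSK tones in Hz: originate side 1070/1270, answer side 2025/2225.
# Assumption: only 1070 and 2225 appear in the comment above; the other two
# are filled in from the standard Bell 103 tone assignments.
BELL_103_TONES_HZ = (1070, 1270, 2025, 2225)


def cycles_per_bit(tone_hz, bit_rate_bps):
    """Number of full sine-wave cycles of a tone that fit in one bit time."""
    return tone_hz / bit_rate_bps


# Compare the nominal 300 bps with the 450 bps "overclock" from the comment.
for rate in (300, 450):
    for tone in BELL_103_TONES_HZ:
        print(f"{tone} Hz at {rate} bps: {cycles_per_bit(tone, rate):.2f} cycles per bit")
```

At 300 bps the highest tone gives a little over 7 cycles per bit, matching the estimate above, while the lowest tone gives only about 3.5, which is presumably the tighter constraint on any detector.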

Ah yes, the good old days. :) I have an ASR-33 in my basement. It is one of the ones with the built-in 110 baud modem in a 1 foot square, 6" wide aluminum box in back of the pedestal. I go back to 100 and 300 baud acoustic coupled modems as well. (Not to mention Baudot.) I remember the jumps to 1200 (Bell 212A), 9600, 14.4K, 33.6 and the biggy: 56K... Thought we were on top of the world if we could connect at 56K! But then Ma Bell would throw us curves. It all goes back to the implementation of their digital carrier system, and the still-in-use-to-this-day T1. A T1 channel is properly a 64 kbps digital channel into which the analog voice/signal was digitized. This was just enough to support the 56K audio bandwidth on a good day. However, a bunch of folks wound up never connecting at more than 33.6 no matter what they did. This was due to either a bit-robbed T1 carrier in their audio path, which was only a 58 kbps channel, or what Ma Bell used to install if they were short of copper pairs to a location: an analog/digital carrier, so they could get 2 POTS lines from one copper pair. It also ran with 58 kbps audio channels. So either setup precluded getting anything faster than 33.6. It became some folks' holy grail quest to get their phone lines rebuilt to support 56K. Ah, the good old days!

Nate, not to be too pedantic, but the thing in the first picture is a teletype like the ASR- or KSR-33, not an early computer. The explanation of the acoustic coupler is all kinds of confused; the handset doesn't act any differently just because it's in a coupler. The handset mouthpiece is a microphone, its earpiece a speaker. The coupler is the opposite. HTH.

Whew that was bad editing on my part. I glanced over the UMSL's description too quickly. It was really confusing.

I've fixed the caption and re-wrote the cradle description. Hopefully a little more straight forward now. Thanks!

Such fond memories. I occasionally catch myself throating the sound of a dial-up connecting when I play with RS232. Speaking of LoRD, I preferred Trade Wars and Lunatic Fringe on my hometown BBS. I can still hear the curses of my parents when they got the phone bill.

In high school in the 70's, we had teletypes with acoustic modems that connected to a minicomputer shared by all high schools in the city. When we wanted to irk the person using the teletype (often because his turn was up and he wasn't relinquishing the terminal), we'd jingle our car keys on the handset, introducing noise on the line to mess up his session. Fun times!

I'm having flashbacks to the days of running a 300bps modem on my Commodore 64. My modem, which plugged into a slot on the back of the C64, was slightly more advanced than an acoustic coupler. I had to dial the number manually, then unplug the handset and connect its wire to the modem when I heard the answering carrier. You could read the text from the BBS as quickly as it appeared. Even Q-Link (AOL before it was AOL) was barely usable.