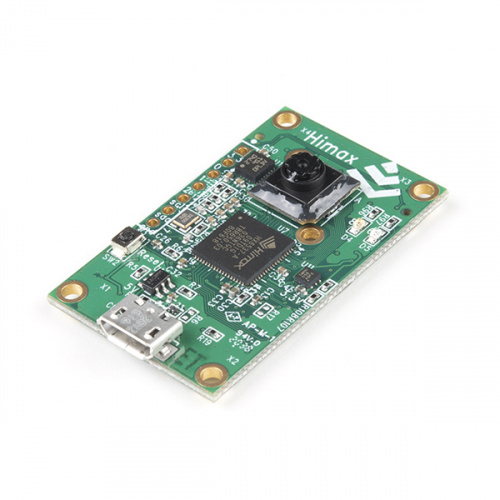

Back in November, SparkFun began carrying the Himax WE-I Plus EVB Endpoint AI Development Board. In December, our friends at Edge Impulse let us know that they now support this board, so what we already thought was pretty awesome became even better.

The Himax WE-I Plus EVB Endpoint AI Development Board

The WE-I Plus EVB is well suited to computer vision, machine vision and other endpoint AI applications. It is a versatile board with a low-power monochrome camera, a microphone and an accelerometer. The built-in WE-I Plus ASIC (HX6537-A) combines a Synopsys ARC EM9D DSP running at 400 MHz with 2 MB of internal SRAM and 2 MB of flash. This chip is fast: it can classify a single 96x96-pixel image in just over 100 ms.
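To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes one byte per pixel for the monochrome frame, treats 2 MB as 2 × 1024 × 1024 bytes, and rounds the classification time down to exactly 100 ms. All three are assumptions made for illustration rather than Himax specifications, and the function names are invented for this example.

```python
# Back-of-the-envelope numbers for a 96x96 monochrome frame.
# Assumptions (not from a datasheet): 1 byte per pixel, 2 MB = 2 * 1024 * 1024 bytes,
# and exactly 100 ms per classification (the post says "just over 100 ms").
WIDTH = 96
HEIGHT = 96
BYTES_PER_PIXEL = 1             # assumed 8-bit grayscale
SRAM_BYTES = 2 * 1024 * 1024    # assumed binary megabytes
INFERENCE_MS = 100.0            # rounded down, so the rate below is an upper bound


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one raw, uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def max_frames_per_second(inference_ms: float) -> float:
    """Upper bound on classifications per second if inference is the only cost."""
    return 1000.0 / inference_ms


frame = frame_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)
print(f"One raw frame: {frame} bytes ({frame / SRAM_BYTES:.2%} of SRAM)")
print(f"Upper bound: {max_frames_per_second(INFERENCE_MS):.0f} classifications per second")
```

Under these assumptions a raw frame occupies 9,216 bytes, a small fraction of the on-chip SRAM, and the board could classify somewhat fewer than 10 frames per second, since the real inference time is slightly above 100 ms.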

WE-I Plus EVB with Edge Impulse

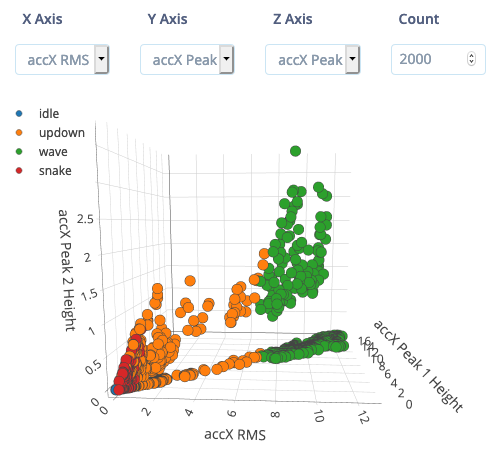

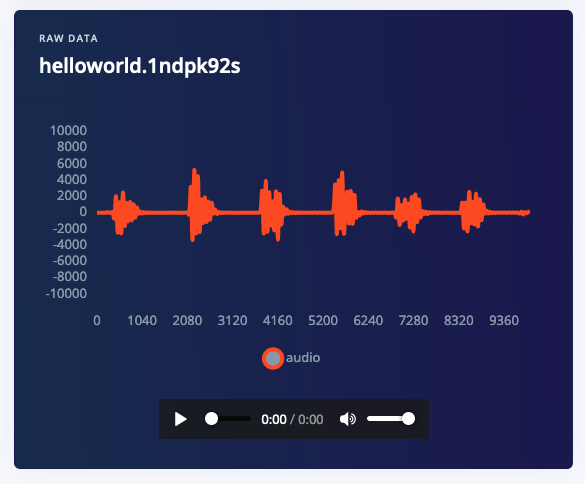

Thanks to the integration with Edge Impulse, developers, engineers, researchers, hobbyists and students (and anyone else, really) can now quickly collect real-world sensor data, train ML models on that data in the cloud, and then deploy the model back to the WE-I Plus board. From there, you can integrate the model into your applications with a single function call. Your sensors then become a whole lot smarter, able to make sense of complex events in the real world. The built-in examples let you collect data from the accelerometer, the microphone and the camera. The accompanying images show snapshots of gesture recognition and audio classification, respectively.
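As a rough illustration of what an application might do with a classification result, the Python sketch below picks the highest-scoring label and discards it if the score falls under a threshold. This is not Edge Impulse SDK code: the function name, the label names, the scores and the 0.6 threshold are all hypothetical and chosen only for this example.

```python
from typing import Dict, Optional


def top_label(scores: Dict[str, float], threshold: float = 0.6) -> Optional[str]:
    """Return the highest-scoring label, or None if nothing clears the threshold."""
    if not scores:
        return None
    label = max(scores, key=scores.get)
    return label if scores[label] >= threshold else None


# Hypothetical scores for a gesture model; the labels are invented for this example.
gesture_scores = {"idle": 0.05, "wave": 0.85, "updown": 0.10}
print(top_label(gesture_scores))
```

Rejecting low-confidence results in this way is one common design choice, since it keeps an application from reacting to ambiguous sensor readings.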

With Edge Impulse and Himax, you can generate models and export them directly to the device with a single button press, in seconds rather than days. The resulting models use the latest neural networks and Edge Impulse EON™ technology so that inference runs as quickly and energy-efficiently as possible.

Learn More On Your Own or Join the Webinar on January 18th

Webinar: The AI Vision and Sensor Fusion

Himax and Edge Impulse are hosting a webinar next week! The webinar, titled "The AI Vision and Sensor Fusion," starts at 8 a.m. PST on Monday, January 18th. To sign up, visit the registration page.

Learning More On Your Own

Edge Impulse has put together comprehensive documentation to guide users through connecting to Edge Impulse, building machine learning models, and troubleshooting. The tutorials for building machine learning models include using your microcontroller to:

* Add sight to your sensors - build a system that can recognize objects through a camera (image classification)

* Build a continuous motion recognition system - build a gesture recognition system

* Recognize sounds from audio - recognize when a particular sound is happening (audio classification)

* Respond to your voice - recognize audible events, particularly your voice (audio classification)

Please let us know about your experience with the WE-I Plus EVB and Edge Impulse.