The SparkFun RP2040 Thing Plus is awfully enticing to use for machine learning. Not only does it have 16 MB of flash memory, but the RP2040 SoC also offers solid inference performance at low power, thanks to its energy-efficient dual Arm Cortex-M0+ cores running at a comparatively high clock frequency of 133 MHz.

Since a version of the TensorFlow Lite Micro library has been ported to the Raspberry Pi Pico, we can start running machine learning models on RP2040 boards, including models that detect people in images or recognize gestures and voices. But beyond that, TensorFlow has documentation for building really useful natural language models, including the BERT Question Answer model.

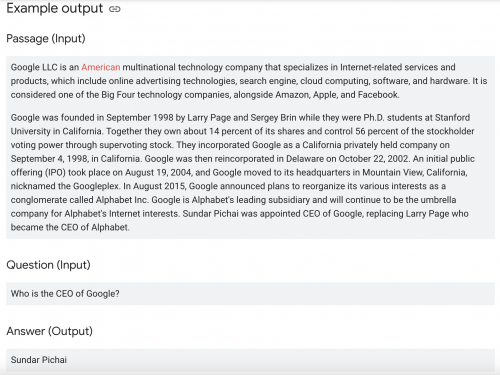

Even if you haven't heard of the BERT Question Answer model, it's likely that you've interacted with systems that follow the same principles. It's what allows machines to read and comprehend human language and interact with us in return. Developed by Google, BERT stands for Bidirectional Encoder Representations from Transformers, which basically means it uses a stack of Transformer encoders to read text input and produce predictions. The bidirectional part means that it looks at the text to both the left and the right of each word, so that it can understand the context of a word within its text. Basically, it's a key building block for machines that can actually communicate with us, like the chat bots you interact with on the web. For example, you can see how the model might be able to pick out an answer from the passage below.

The question is, can it be ported to a microcontroller like the RP2040 Thing Plus, which has limited RAM and storage and thus places constraints on the size of the machine learning model? We attempted this by training a model through TensorFlow, and then converting it to C files that could be loaded onto the RP2040 Thing Plus and fed new data.
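To make the size constraint concrete, here is a minimal back-of-the-envelope sketch. It assumes the model weights stay in flash while the working memory TensorFlow Lite Micro needs (the tensor arena) lives in the RP2040's 264 KB of on-chip SRAM. The function name `fits_on_board` and the example sizes are hypothetical, not measurements from this project.

```python
# Rough memory budget check for a model on the RP2040 Thing Plus.
# Assumption: weights are stored in flash, the tensor arena lives in SRAM.
RP2040_SRAM_BYTES = 264 * 1024               # on-chip SRAM of the RP2040
THING_PLUS_FLASH_BYTES = 16 * 1024 * 1024    # flash size quoted in this article


def fits_on_board(model_bytes, arena_bytes, firmware_bytes=0, ram_overhead_bytes=0):
    """Return (fits_flash, fits_ram) for the given sizes in bytes.

    model_bytes:        size of the exported .tflite file
    arena_bytes:        working memory the interpreter needs at run time
    firmware_bytes:     rest of the program stored in flash (hypothetical)
    ram_overhead_bytes: stack, heap and other RAM use (hypothetical)
    """
    fits_flash = model_bytes + firmware_bytes <= THING_PLUS_FLASH_BYTES
    fits_ram = arena_bytes + ram_overhead_bytes <= RP2040_SRAM_BYTES
    return fits_flash, fits_ram


if __name__ == "__main__":
    # Hypothetical sizes, only to show how the check is used.
    print(fits_on_board(model_bytes=4 * 1024 * 1024, arena_bytes=100 * 1024))
```

A check like this is worth running before flashing, since a model can fit comfortably in flash and still need more RAM than the chip has.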

Training the Model

TensorFlow has extensive documentation that leads you through training the model, and the amount of code required is surprisingly small. It comes down to five main steps: choosing the model (in this case it's MobileBERT, since it’s thin and compact for resource-limited microcontroller use), loading in data, retraining the model with the given data, evaluating it, and exporting it to TensorFlow Lite format (tflite).

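# Imports from TensorFlow Lite Model Maker (exact module paths may differ between versions).

from tflite_model_maker import model_spec

from tflite_model_maker import question_answer

from tflite_model_maker.question_answer import DataLoader as QuestionAnswerDataLoader

# train_data_path, validation_data_path and export_dir are paths you define yourself.

# Chooses a model specification that represents the model.

spec = model_spec.get('mobilebert_qa')

# Gets the training data and validation data.

train_data = QuestionAnswerDataLoader.from_squad(train_data_path, spec, is_training=True)

validation_data = QuestionAnswerDataLoader.from_squad(validation_data_path, spec, is_training=False)

# Fine-tunes the model.

model = question_answer.create(train_data, model_spec=spec)

# Gets the evaluation result.

metric = model.evaluate(validation_data)

# Exports the model to the TensorFlow Lite format with metadata in the export directory.

model.export(export_dir)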

The data we'll be giving it comes from TriviaQA, a large-scale dataset built for training machine reading comprehension models.
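Since the loader used above is `from_squad`, the training file needs to follow the SQuAD JSON layout: a passage (the context), a question, and an answer given as text plus its character offset in the passage. The sketch below builds one such record by hand to show the shape of the data. The helper name `make_squad_example` and the sample passage are made up for illustration, and the real TriviaQA files are of course much larger.

```python
import json


def make_squad_example(title, context, question, answer_text, qid):
    """Build one SQuAD v1.1-style article containing a single question."""
    start = context.find(answer_text)
    if start < 0:
        raise ValueError("answer text must appear verbatim in the context")
    return {
        "title": title,
        "paragraphs": [{
            "context": context,
            "qas": [{
                "id": qid,
                "question": question,
                # answer_start is the character offset of the answer in the context
                "answers": [{"text": answer_text, "answer_start": start}],
            }],
        }],
    }


example = make_squad_example(
    title="RP2040",
    context="The RP2040 has two Arm Cortex-M0+ cores.",
    question="How many cores does the RP2040 have?",
    answer_text="two",
    qid="demo-0001",
)
dataset = {"version": "1.1", "data": [example]}

if __name__ == "__main__":
    print(json.dumps(dataset, indent=2))
```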

Once we export the model to a tflite file, we'll need to use xxd to convert it to a C array so that it can be compiled into the firmware and run on a microcontroller.

xxd -i converted_model.tflite > model_data.cc
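If xxd isn't available on your machine, the same conversion is easy to sketch in Python. The function below mimics the layout that `xxd -i` produces (an `unsigned char` array plus a length variable); the function name `to_c_array` and the array name used in the example are just illustrative choices.

```python
def to_c_array(data: bytes, name: str, per_line: int = 12) -> str:
    """Render raw bytes as a C array definition, similar to `xxd -i` output."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk))
    body = ",\n".join(lines)
    return (
        f"unsigned char {name}[] = {{\n{body}\n}};\n"
        f"unsigned int {name}_len = {len(data)};\n"
    )


if __name__ == "__main__":
    # In practice you would read the exported model instead of these demo bytes:
    #   data = open("converted_model.tflite", "rb").read()
    print(to_c_array(b"\x1c\x00\x00\x00TFL3", "converted_model_tflite"))
```

On a microcontroller you may also want to mark the generated array `const` so that the toolchain can leave it in flash rather than copying it into RAM at startup.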

Running Inference on the RP2040 Thing Plus

Running inference on a device basically means loading the model onto the microcontroller and running it on new data. TensorFlow has documentation for running inference on microcontrollers, which walks through several steps: including the library headers, including the model header, loading the model, allocating memory, and running the model on data that it hasn't seen before.

#include "pico/stdlib.h"

#include "tensorflow/lite/micro/all_ops_resolver.h"

#include "tensorflow/lite/micro/micro_error_reporter.h"

#include "tensorflow/lite/micro/micro_interpreter.h"

#include "tensorflow/lite/schema/schema_generated.h"

#include "tensorflow/lite/version.h"

#include "conv_bert_quant.h"

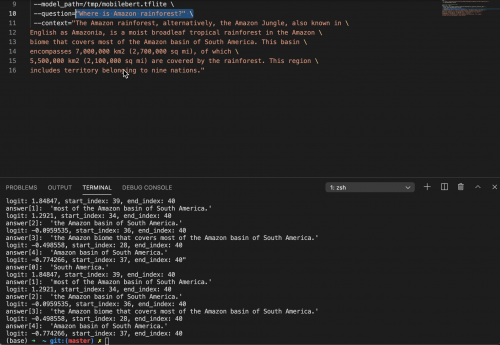

Lastly, we can run the model with an input, like the passage below, and see in the console what kind of answers the model produces once it has read the text.
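To give a feel for how an answer comes out of the model, here is a minimal Python sketch of the usual decoding step for BERT-style question answering: the model scores every token as a possible start and a possible end of the answer, and the best-scoring valid span wins. The tokens and scores below are invented for illustration, and a real pipeline also handles tokenization and special tokens, which this sketch leaves out.

```python
def best_answer_span(start_logits, end_logits, max_answer_len=30):
    """Return ((start, end), score) for the span with the highest combined score.

    start and end are inclusive token indices with start <= end.
    """
    best = None
    best_score = float("-inf")
    for s, s_logit in enumerate(start_logits):
        last = min(s + max_answer_len, len(end_logits))
        for e in range(s, last):
            score = s_logit + end_logits[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best, best_score


# Made-up tokens and scores, just to exercise the function.
tokens = ["the", "rp2040", "has", "two", "cores"]
start_logits = [0.1, 0.2, 0.1, 3.0, 0.5]
end_logits = [0.0, 0.1, 0.2, 1.0, 2.5]

span, score = best_answer_span(start_logits, end_logits)
answer = " ".join(tokens[span[0]:span[1] + 1])

if __name__ == "__main__":
    print(span, answer)
```

Capping the span length with `max_answer_len` keeps the search cheap, which matters when the same logic has to run on a small device.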

Final Thoughts

It's quite amazing that a microcontroller can run a machine learning model like BERT, and thanks to TensorFlow, it's possible to run all sorts of machine learning models. What kind of machine learning applications interest you? I highly recommend giving them a try on one of the RP2040 boards because they are so well equipped for this kind of heavy lifting. Comment below what you want to try, and happy hacking!
